Artificial intelligence and TikTok: how famous female journalists are systematically exploited in undisclosed AI-generated content

It is easy to steal someone's face, body, and voice and create an AI-generated copy of a person who will speak and move as the creator wants them to.


Fake video with a real TV host

Here you see a fake version of Valeriia Pavlenko, host of the Texty.org.ua YouTube channel.

In the video she says: “I look like Valeriia Pavlenko, but in reality, I am an artificially generated video from a photo.”

Below, we describe the content promoted by AI-generated clones of journalists and explain how to distinguish them from the real people.

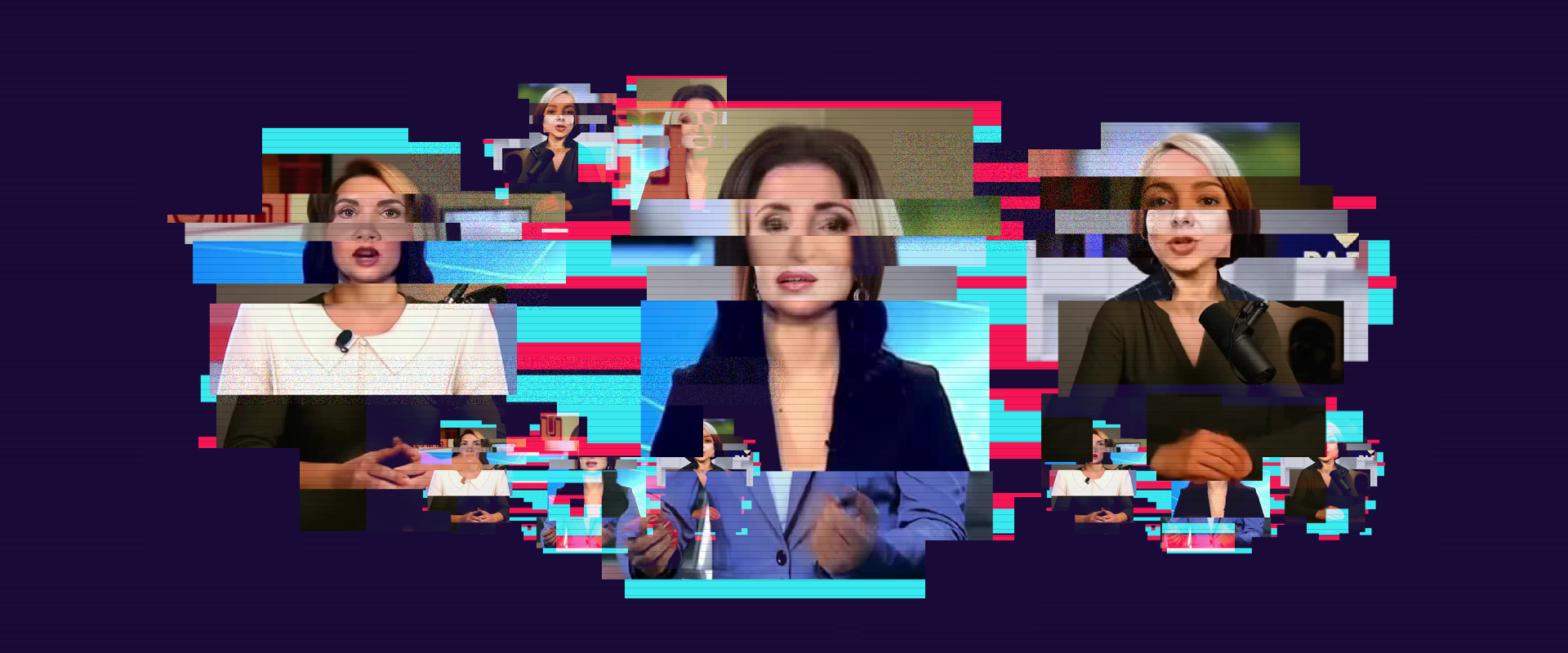

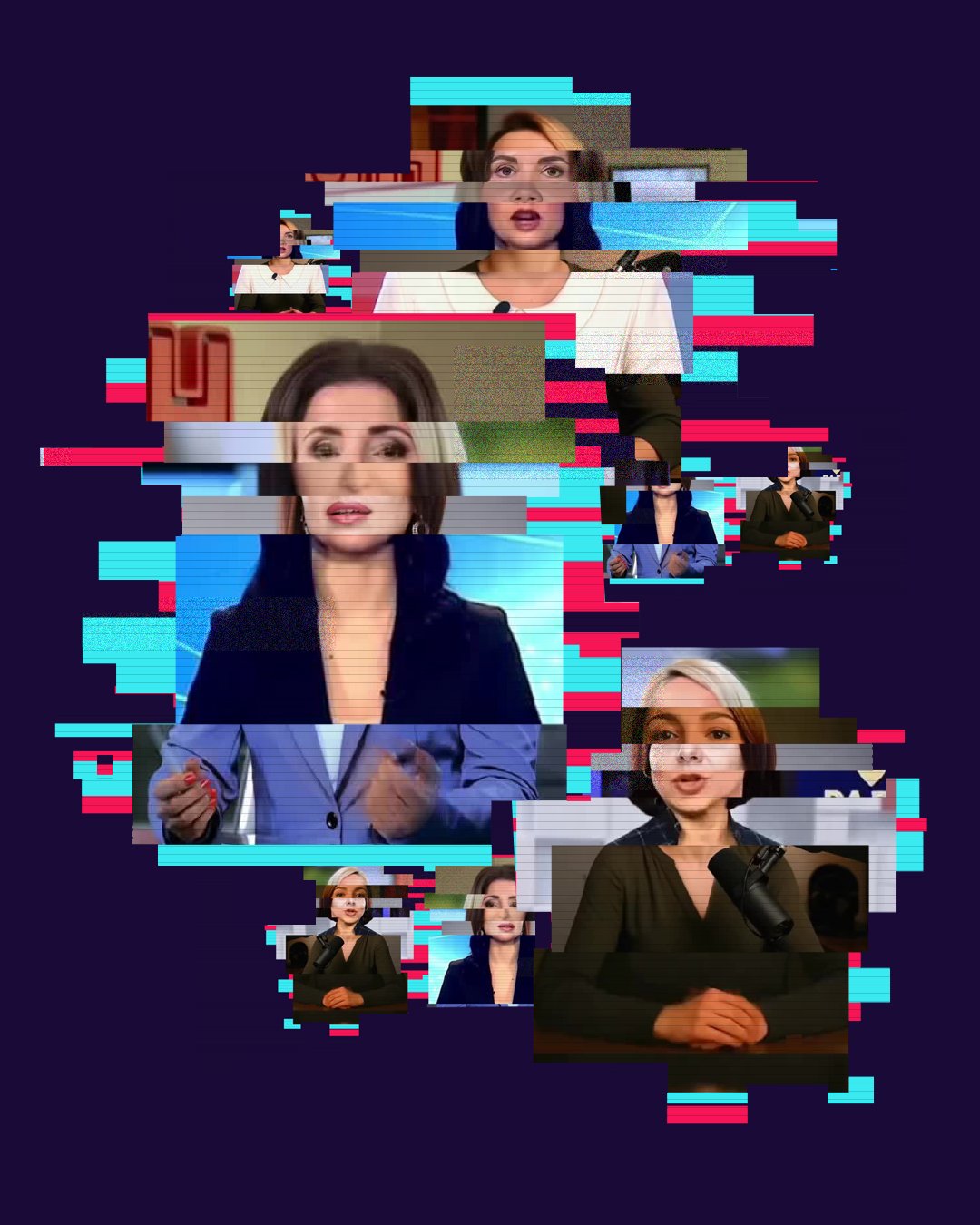

We analyzed 595 AI-generated videos that used the likeness of well-known women. In total, these clips received over 24 million views on TikTok. The number of views and user reactions to some of them is striking.

Brief overview of the investigation

In 2008, Solomiia Vitvitska began her career on the 1+1 television channel, first as a correspondent for TSN (a daily news program) and later as a presenter. Today, Vitvitska is one of the most recognizable faces on 1+1.

However, we were surprised to discover that the TV presenter's image is actively used on TikTok, and not always with her consent. We found 100 videos in which a virtual Vitvitska speaks and acts thanks to artificial intelligence algorithms. In some videos, her voice is generated, while others feature completely false stories with fake facial expressions, synthetic intonation, and disinformation messages.

These are not isolated cases. Ukrainian female journalists are increasingly becoming «digital avatars», not by their own free will or with their consent, but because manipulators seek to exploit the trust placed in them as a means of influence. What seemed like a technological curiosity a few years ago is now turning into a form of systematic technology-facilitated gender-based violence (TFGBV). Artificial intelligence has become a tool for discrediting and spreading misinformation.

We decided to investigate how artificial intelligence is used on TikTok to create or distort videos that insult journalists. Our focus is on female journalists, who comprise the majority of Ukraine's media industry in terms of numbers.

During our analysis, we came across dozens of videos where their voices, appearances, or speech patterns were artificially altered or completely AI-generated. We investigated the AI technologies being used, the topics of these videos, and the goals pursued by those behind them.

The videos use various artificial intelligence technologies, from voice synthesis and superimposing it on real images to full-fledged deepfakes with generated images and sound.

Most of the content in our sample showed signs of audio interference. In other words, real news footage was dubbed with new voices created to promote a specific message, story, or narrative. Sometimes the voice was artificially modeled after well-known Ukrainian TV presenters, which enhanced the effect of authenticity.

Such audio manipulations often give themselves away. Unnatural pronunciation, incorrect stress, strange pauses, and a broken speech rhythm are all signs of machine-generated speech.

We identified 328 TikTok videos that featured audio manipulation and another 267 videos where artificial intelligence was used to create or modify both the image and the voice.

Data collection and processing. From August 1 to September 30, we searched TikTok for videos featuring women journalists.

To build our dataset, we used the snowball sampling technique. Initially, we searched mainly via the hashtags #journalist, #femalejourmalist, #femalehosts, #TSN, #inter. As the process continued, we discovered videos with additional hashtags and gradually expanded the list. You can find the final list of hashtags used for the search at this link.
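The snowball expansion described above can be sketched roughly as follows. This is a minimal illustration, not the procedure the authors actually ran: `fetch_videos` is a hypothetical stand-in for whatever search step was used, the `max_rounds` limit is an assumption, and the toy index contains invented video IDs.

```python
from collections import deque

def snowball_hashtags(seeds, fetch_videos, max_rounds=2):
    """Collect videos for seed hashtags, then follow newly seen hashtags.

    fetch_videos(tag) is a hypothetical callable returning a list of dicts
    with "id" and "hashtags" keys; it is not a real TikTok API call.
    """
    seen_tags = set(seeds)
    queue = deque((tag, 0) for tag in seeds)
    videos = {}
    while queue:
        tag, depth = queue.popleft()
        for video in fetch_videos(tag):
            videos[video["id"]] = video  # de-duplicate by video ID
            if depth + 1 > max_rounds:
                continue  # do not expand beyond the allowed number of rounds
            for new_tag in video["hashtags"]:
                if new_tag not in seen_tags:
                    seen_tags.add(new_tag)
                    queue.append((new_tag, depth + 1))
    return seen_tags, videos

# Invented toy data, for illustration only.
TOY_INDEX = {
    "#journalist": [{"id": "v1", "hashtags": ["#journalist", "#TSN"]}],
    "#TSN": [
        {"id": "v1", "hashtags": ["#journalist", "#TSN"]},
        {"id": "v2", "hashtags": ["#TSN", "#petition"]},
    ],
    "#petition": [{"id": "v3", "hashtags": ["#petition", "#laws"]}],
}

def toy_fetch(tag):
    return TOY_INDEX.get(tag, [])

tags, videos = snowball_hashtags(["#journalist"], toy_fetch, max_rounds=2)
print(sorted(tags))
print(sorted(videos))
```

Capping the number of rounds keeps the search bounded, which is also why hashtags discovered in the final round (here `#laws`) are not followed further.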

From the collected videos, we selected those that contained elements of AI-generated content (audio or video) or AI-modified content.

We stored the necessary information using Python-based TikTok scrapers: we downloaded the videos, their IDs, titles, descriptions, usernames, publication time, and the number of likes and comments.

To check whether the audio was AI-generated, we used the Hiya Deepfake Voice Detector browser extension for Chrome, which analyzes the voice. We labeled the voice as fake if the tool detected a probability of more than 50% that the audio track was inauthentic.
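The labeling rule above amounts to a simple threshold. The sketch below assumes the detector's result has been recorded as a probability between 0 and 1; how the Hiya extension actually reports its score is not described here, so the input format and the label names are assumptions.

```python
FAKE_THRESHOLD = 0.5  # "more than 50%", as stated in the text

def label_voice(fake_probability: float) -> str:
    """Label a voice track from a recorded detector probability (0..1)."""
    if not 0.0 <= fake_probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # Strictly greater than the threshold: exactly 50% is not flagged.
    return "fake" if fake_probability > FAKE_THRESHOLD else "not flagged"

print(label_voice(0.51))
print(label_voice(0.50))
```

A track that is "not flagged" is not thereby proven authentic, which is one reason a manual validation step is still needed.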

In addition to automated checks, we carried out manual validation of the results. During this process, we further confirmed the presence of AI interference in the videos and grouped the materials according to predefined categories: type of primary AI intervention (voice/video), topic of the video, and the journalist whose image was used.
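One possible way to hold the manually validated labels and count them by category is sketched below. The field names follow the categories listed above, but the record layout itself and the sample rows are invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class LabeledVideo:
    video_id: str
    username: str
    intervention: str  # primary AI intervention: "voice" or "video"
    topic: str
    journalist: str

def tally(videos, field):
    """Count videos by one of the labeled categories."""
    return Counter(getattr(video, field) for video in videos)

# Invented sample rows; not taken from the real dataset.
sample = [
    LabeledVideo("v1", "account_a", "voice", "petition", "journalist_a"),
    LabeledVideo("v2", "account_b", "video", "petition", "journalist_a"),
    LabeledVideo("v3", "account_a", "voice", "social assistance", "journalist_b"),
]

print(tally(sample, "intervention"))
print(tally(sample, "topic"))
```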

The visual part of our material was inspired by Bloomberg's project “The Second Trump Presidency, Brought to You by YouTubers”.

Methodological limitations

TikTok restricts access to complete data, which affects the comprehensiveness of the sample.

Searching for videos using the snowball technique does not allow us to cover the full volume of materials. It is limited to a clearly defined set of hashtags and does not account for relevant videos marked with hashtags that were not included in the search.

The validation tools used, including those for detecting AI interference (in our case, Hiya Deepfake Voice Detector), may produce errors or false results. Therefore, we additionally applied manual verification of the obtained results.

Exploitation of images

Creators of undisclosed AI-generated content often exploit images of well-known Ukrainian journalists and TV presenters. This is a calculated strategy.

First, journalists are associated with trust. Since trust in a message depends on who is broadcasting it, the use of their image is intended to break through the wall of mistrust.

Second, recognizable faces increase views and content reach: users are more likely to respond to familiar media personalities, especially those they often see on TV or YouTube.

Third, manipulators deliberately choose images of women. Female journalists are often associated with empathy, care, and honesty, emotional qualities that enhance trust in a message even when it is created by an algorithm.

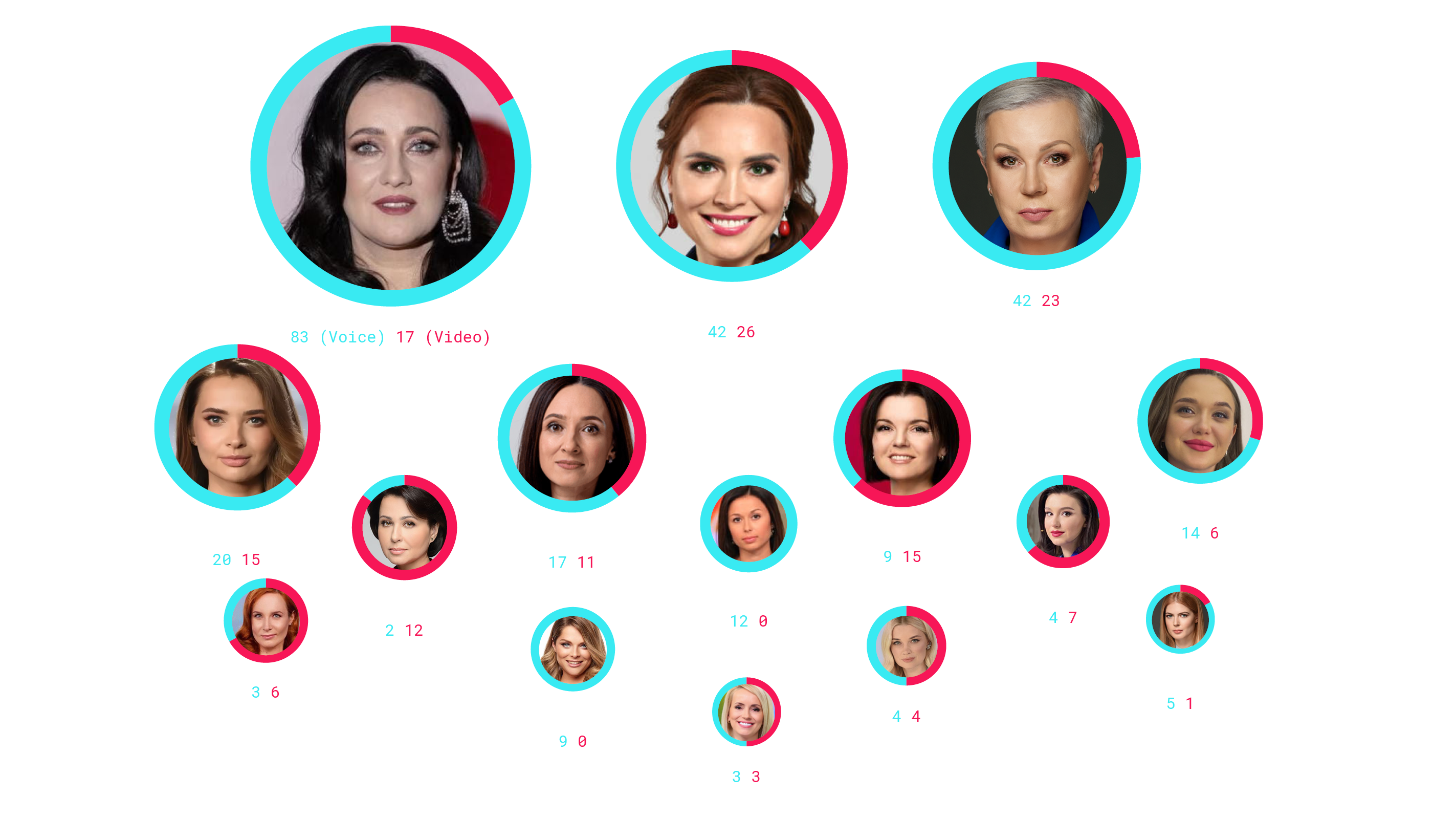

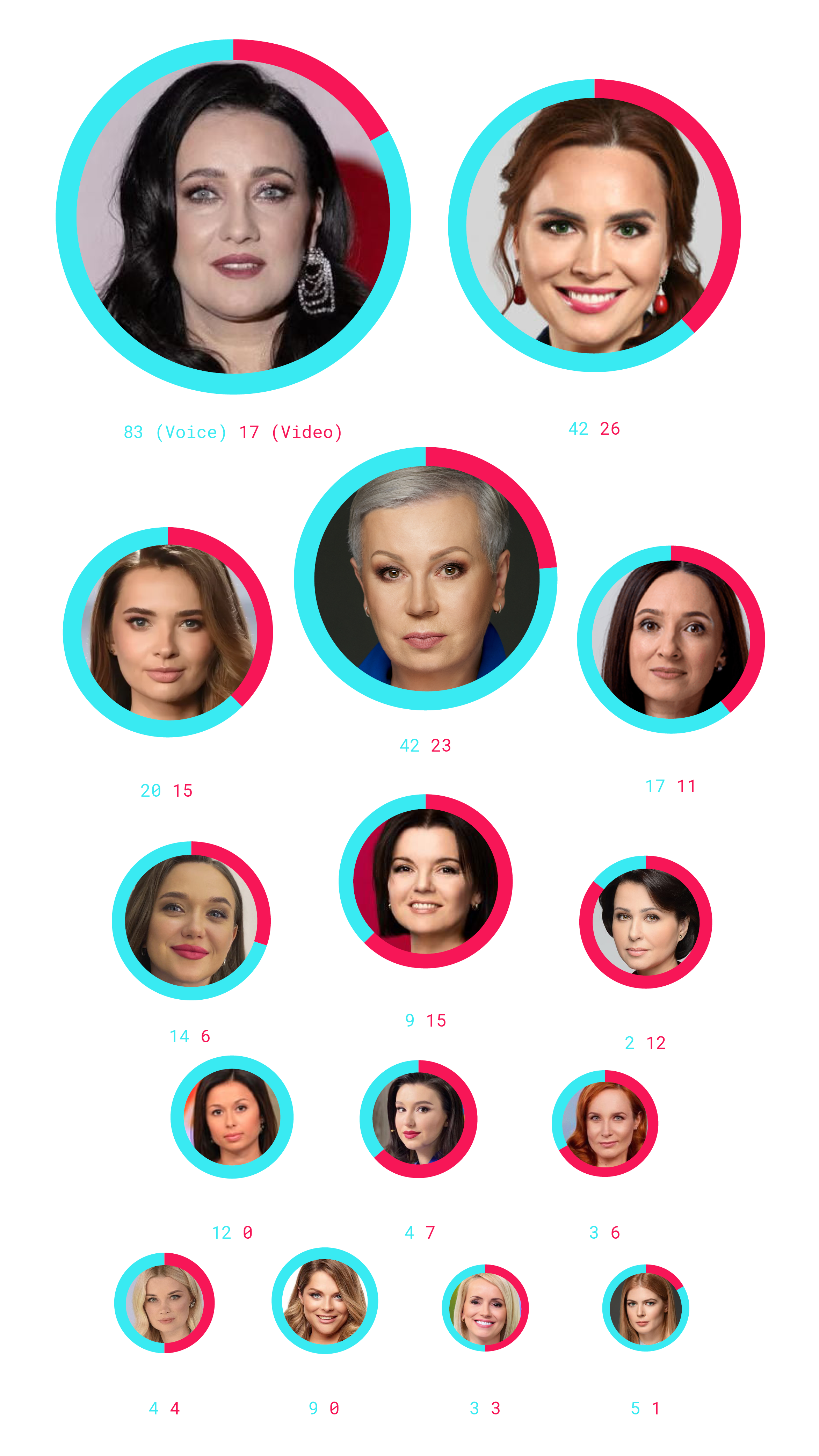

Among the female journalists whose images are used most actively are Solomiia Vitvitska from 1+1 (17 video generations and 83 audio manipulations), Anastasiia Daugule from Inter TV channel (26 video generations and 42 audio manipulations), and Alla Mazur from 1+1 (13 video generations and 42 audio manipulations).

The most popular TV hosts whose images are used in fake videos

These cases have all the hallmarks of technology-facilitated gender-based violence (TFGBV).

Use of a female journalist's image without consent

The videos were created with AI using the faces and voices of female journalists without their consent. This is a form of deception that undermines a journalist's professional reputation and creates additional security risks.

Gender vulnerability and discrediting

Female journalists are often targeted in such attacks precisely because they are highly public figures and trusted by their audience. The use of a female image reinforces the effect of trust, but at the same time discredits her as a person and as a professional. This is an element of gender discrimination: it is not just a journalist who is being attacked, but specifically a woman in the media.

Digital violence and invasion of privacy

Publishing fake content in a journalist's name is a form of online violence that can lead to a loss of audience trust, threats on social media, or secondary victimization. The journalist loses control over her image, which is then used as a tool for fraud.

Broader impact on other women

Such cases create a «chilling effect» for other female journalists: they see that they could be the next victims of deepfake manipulation, and this exacerbates the atmosphere of fear, self-censorship, and refusal to actively participate in public life.

The source here.

In total, the videos from our dataset have garnered over 24 million views. The number of views and reactions from social media users to individual videos is impressive.

The most popular fake videos

For example, on May 17, 2025, the user profile coaxinyxhzq posted a video (#petition #ukraine # news #laws #ukraine above all💙💛). As of October 15, the video had already been deleted. However, at the time we recorded it, it had garnered nearly 670,000 views, over 28,500 likes, 2,153 reposts, and over 1,600 comments.

In the video, the fake Alla Mazur claims that viewers can sign an initiative supporting a proposal by Oleksii Honcharenko, a member of the Ukrainian Parliament, to mobilize 500,000 police officers.

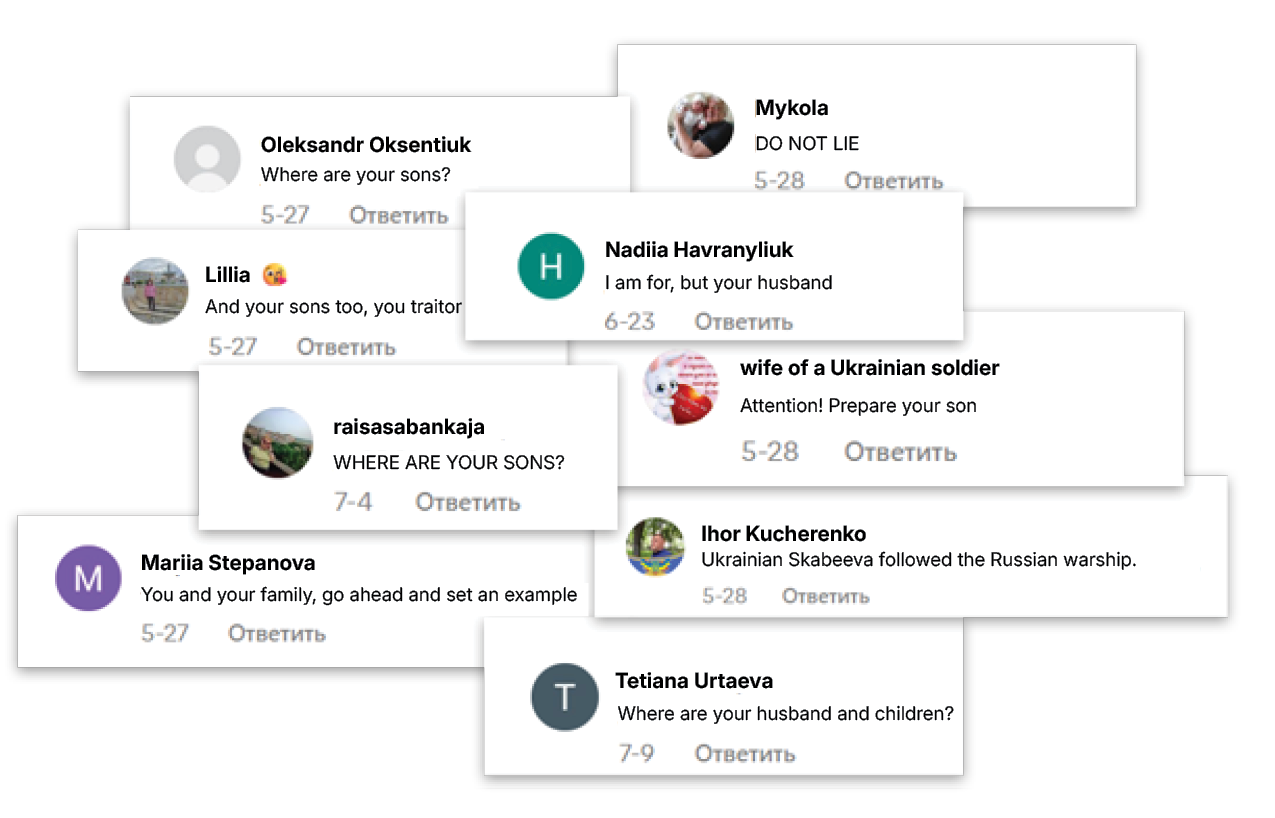

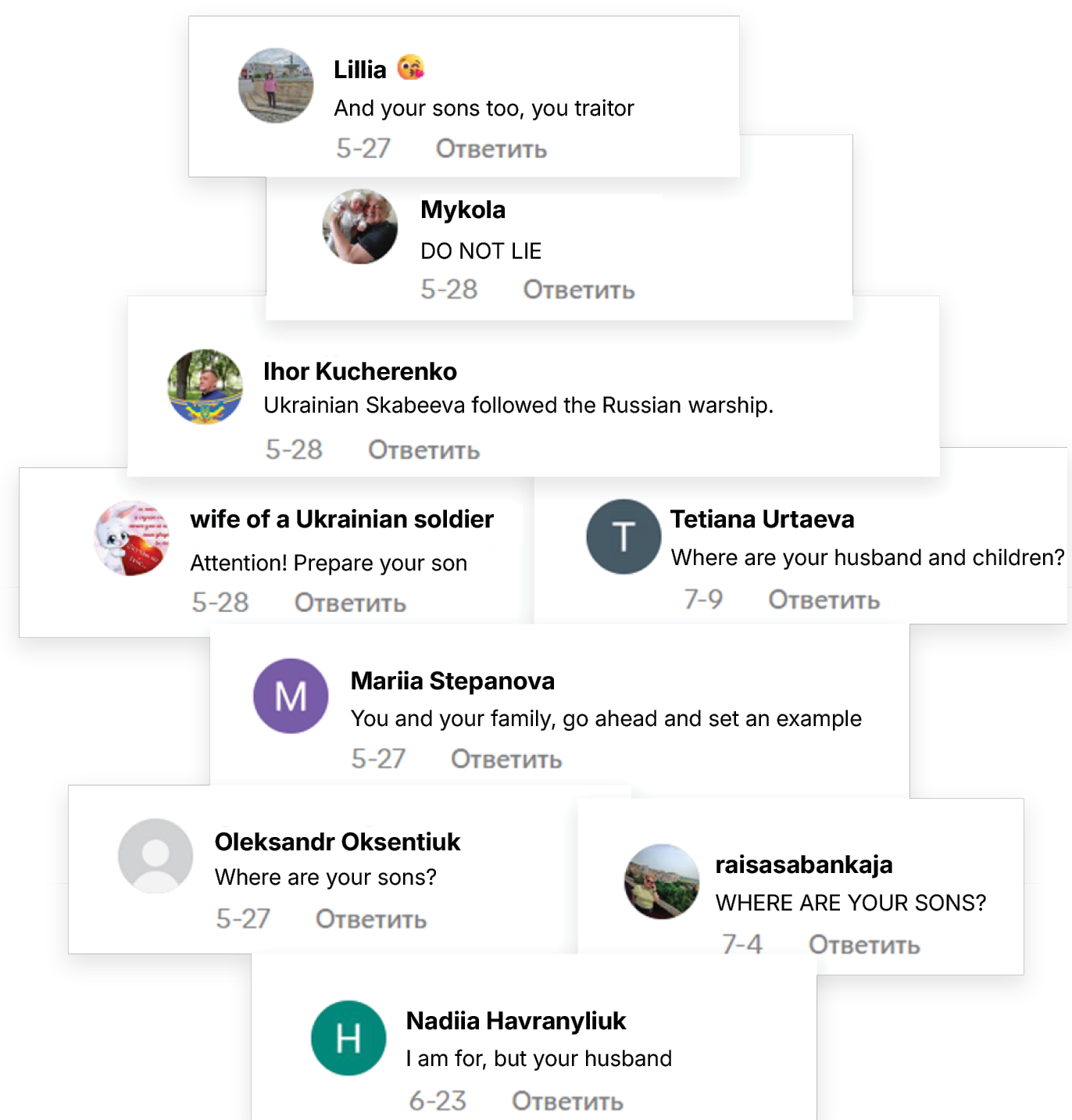

A video featuring a fake Natalia Moseychuk, published on the tsn11ua2 user profile on May 27, 2025, has garnered 517,000 views, over 7,700 likes, 226 reposts, and 258 comments. It states that a petition has been created to send police officers and employees of the Recruiting office to the front instead of “ordinary Ukrainians”.

A video featuring Alla Mazur and an AI-generated voice discussing the conditions for ending the war, published on May 10, 2025, on the ukr_news52 channel, has garnered over 415,000 views and 2,381 reposts.

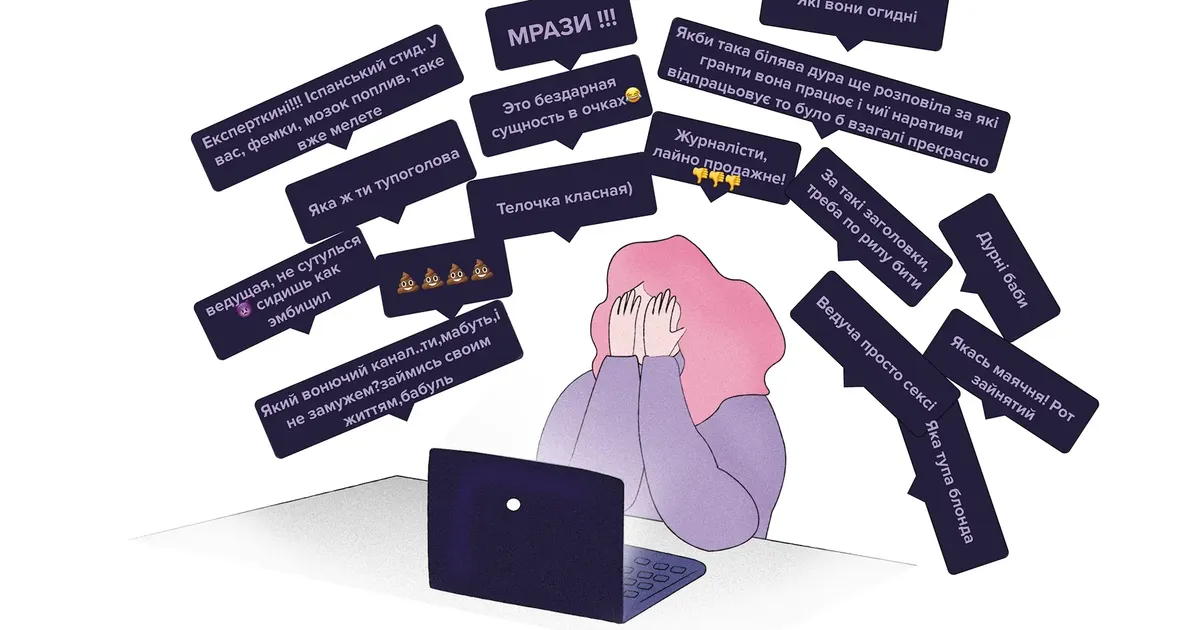

Such content affects the journalist's reputation and how she is perceived by her audience. People often confuse the real journalist with her AI copy and direct their hostility at the real person for what her fraudulent copy says.

For example, users who watched the above-mentioned video with the fake Moseychuk wrote that she was a traitor, hiding her children and husband from mobilization.

Such comments may also be part of a targeted smear campaign and may not belong to real users.

Comments under the fake video

It is worth noting that those who exploit the images of popular TV presenters also copy well-known media brands. The names of TikTok accounts used to promote fake journalists often contained elements that evoked associations with legitimate news sources. For example, the element tsn (TSN, short for “Television News Service”, is a popular daily news programme in Ukraine) appeared in at least fifteen account names: tsn.news.ua1, tsn_.news11, tsn.news.24.7, tsn1.news, etc. Such imitation was intended to create an additional illusion of trust in the distributors of video content.
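A rough way to flag account names that borrow a media brand is to split each username into alphabetic tokens and look for the brand among them. This is only a heuristic sketch, not the method used in the investigation; the usernames are those quoted in this article, and the function name is illustrative.

```python
import re

def imitates_brand(username: str, brand: str = "tsn") -> bool:
    """Return True if the brand appears as a separate alphabetic token."""
    # Split on anything that is not a letter, so "tsn11ua2" -> ["tsn", "ua"].
    tokens = re.findall(r"[a-z]+", username.lower())
    return brand in tokens

accounts = [
    "tsn.news.ua1", "tsn_.news11", "tsn.news.24.7", "tsn1.news",
    "tsn11ua2", "news_ua_tsn", "ukr_news52", "ukrnews_today",
]
flagged = [name for name in accounts if imitates_brand(name)]
print(flagged)
```

Matching whole tokens rather than substrings avoids flagging unrelated names that merely contain the letters "tsn", at the cost of missing names where the brand is fused to other letters.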

What fake journalists promote

Fake AI-generated images and videos of female journalists are weaponized for a range of purposes, from spreading anti-Ukrainian and destructive narratives to commercial goals such as calls to subscribe to channels. We have identified several main groups:

The most common topics of fake videos

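[Chart: number of videos per topic. Petitions and surveys: 268; fake social assistance: 145; destructive or anti-Ukrainian narratives: 116; three further categories: 22, 18, and 26.]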
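The topic counts from the chart can be checked against the dataset size of 595 videos and converted into shares. In this small sketch only the first three labels are confirmed by the article's text; the last three category names are placeholders, since their labels did not survive in the chart.

```python
# Topic counts as they appear in the chart. The three "unlabeled" keys are
# placeholders: the article text does not name those categories.
topic_counts = {
    "petitions_and_surveys": 268,
    "fake_social_assistance": 145,
    "destructive_narratives": 116,
    "unlabeled_4": 22,
    "unlabeled_5": 18,
    "unlabeled_6": 26,
}

total = sum(topic_counts.values())
shares = {topic: round(100 * n / total, 1) for topic, n in topic_counts.items()}

for topic, pct in shares.items():
    print(f"{topic}: {topic_counts[topic]} videos, {pct}% of {total}")
```

The six counts add up to 595, and the first three shares round to the 45%, 24%, and roughly 20% figures quoted in the text.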

The open advertising of the relevant technologies shows how far the creation of digital avatars has been normalized on social media. For instance, on the TikTok account garant_konstitucy, deepfakes have become a commodity: here you can order an artificially generated greeting or prank. For an additional fee, the producers of undisclosed AI-generated content are willing to teach clients how to «make high-quality deepfakes.»

The videos on the channel contain warnings that they are deepfakes. However, the question remains: did the authors obtain consent to use the images of popular TV presenters?

In the posts we analyzed, by contrast, most of the people behind the AI-generated images and videos impersonating real people tried to hide the fact that these were not real testimonies.

The largest number of videos in our set that use AI contain a call to sign a petition or take a survey — 268 (45%).

For example, «popular TV presenters» talk about a petition that will:

- return Zaluzhny to the post of commander-in-chief;

- lead to Zelensky's resignation or make pro-Russian politician Yevhen Murayev president;

- send all police officers and employees of the Recruiting office to the front;

- help confiscate the property of top officials or Yulia Tymoshenko;

- force member of the Ukrainian Parliament Mariana Bezuhla to go to the frontline.

At first glance, these messages seem random. However, their structure clearly repeats the usual tactics of Russian disinformation: appealing to emotions, stirring up controversy, and undermining trust in the state.

For example, the question of Valerii Zaluzhny's possible return to the post of commander-in-chief, although it has no real basis, provokes controversy and strong emotions. The promotion of Yevhen Murayev is expected: he is a figure whom the Russian media has repeatedly tried to legitimize.

Another thesis — about sending all police officers to the front — also sounds unrealistic. However, it is actively promoted by sources that spread Russian narratives in Ukraine and, unfortunately, resonates with part of society.

The idea of confiscating the property of high-ranking officials is another tool for increasing social polarization, designed to separate the state from its citizens.

In such videos, the producers of this undisclosed AI-generated content encourage viewers to follow the links provided in the post, to support the initiative, or to participate in the vote. In practice, this means only one thing: the user goes to third-party resources, subscribes to fake accounts, or, even worse, transfers their personal data to malicious actors.

Fake videos calling for people to sign petitions or take surveys

| Date | Channel | Video topic | Views |
|---|---|---|---|
| 13.05.2025 | ukrnews_today | Petition for the mobilisation of police officers | 64,600 |
| 15.07.2025 | interesting_petitions_ua | Petition to send employees of the Recruiting office to the front lines | 352,900 |
| 08.05.2025 | news_ua_tsn | Petition to cancel bonuses for the employees of the Recruiting office and police employees for the mobilisation | 270,100 |
| 15.05.2025 | online__petition | Petition for Stefanchuk's resignation | 129,200 |
| 07.04.2025 | news.in.ua2025 | Petition for Zaluzhny's reinstatement and Syrsky's dismissal | 1,567 |

Almost every fourth video we found with AI interference exploits images of female journalists for a similar purpose — to obtain user data and increase the audience of their resources with fake news about social assistance. We found 145 such videos (24%).

Fake AI-generated videos show journalists Nataliia Ostrovskaya and Iryna Prokofieva (TV presenters on the 1+1 channel) saying that Ukrainians can receive allowances, pensions, or payments if they fill out the appropriate forms. The appeals are often targeted at specific categories: pensioners, parents, teachers, and those who remained in the country after the start of the large-scale invasion. The payments are allegedly made by various organizations, including the UN, the Red Cross, the EU, Canada, the US, state banks, and the president himself.

Fake videos about social care

| Date | Channel | Video topic | Views |
|---|---|---|---|
| 15.05.2025 | ukrnews_today | Receive funds confiscated from the commander-in-chief of the Recruiting office | 15,000 |
| 12.03.2025 | maratcppl9g | Receive funds from the UN | 11,800 |
| 29.12.2024 | gymanitarka.ua | Receive humanitarian aid | 3,543 |
| 16.03.2025 | newsnow673 | Receive assistance from the Red Cross | 2,238 |
| 01.04.2025 | maratcppl9g | Receive funds from the UN | 3,905 |

Almost 20% of videos (116) contain narratives that are destructive or anti-Ukrainian. Most often, this content is about the horrors of mobilization, illegal actions by law enforcement agencies, and the discrediting of the government and military-political leadership. Such videos claim that Ukraine's neighbors are allegedly planning to occupy the western regions or that peace will come in the near future — all you have to do is subscribe to social media to find out the date.

Speculation on current events happens instantly. For example, on July 19, 2024, political and public figure Iryna Farion was killed, and within a few days, AI-generated voices superimposed on real news reports were spreading fake news on TikTok that the killer was a relative of Farion, that the wrong person had been arrested, or that there was real footage of the tragedy.

Fake videos with destructive narratives

| Date | Channel | Video topic | Views |
|---|---|---|---|
| 07.05.2025 | terminovinews8 | New mobilisation rules | 324,200 |
| 29.05.2025 | news_0f_ukra1ne | Men will be forced to return from abroad | 234,600 |
| 06.05.2025 | newsukr93 | The employees of the Recruiting office were given more powers | 148,300 |
| 23.05.2025 | tsn11ua2 | Advancement of Russians in the Dnipropetrovsk region | 62,900 |
| 15.05.2025 | ukrnews_today | Talks between Putin and Zelensky begin | 16,000 |

How to distinguish between real and fake videos

OSINT expert Hank van Ess notes: «The arms race between AI creators and detectors continues, and currently, the creators have the upper hand in terms of speed. Determining what is a deepfake and what is not is turning into a game of cat and mouse, and developers are constantly improving the technology.»

When checking, it is important to pay attention to warning signs, especially if identification needs to be done quickly. The expert lists the following points for a quick «visual» check.

- Check for perfection: does the person look too elegant, too perfect for the situation?

- Check the skin in real conditions: is the skin too smooth, is there noticeable retouching where there should be natural texture? Pay attention to the natural texture of the skin, pores, and minor asymmetries.

- Overall assessment of appearance: does the appearance match the scenario?

- Assessment of the physical properties of clothing: are there natural folds, signs of wear?

- Analysis of hair strands: are individual hairs visible, or does it look like a painted/glossy image?

- Realism of jewelry and accessories: are the objects three-dimensional or flat, like in computer graphics?

- Teeth review: are there natural imperfections, or does everything look perfect and uniform?

Voice and audio processing deserve separate attention. Voice cloning technology can reproduce any person's voice from just a few seconds of recorded audio. However, there are still noticeable traces of artificial origin in speech patterns, emotional authenticity, and acoustic characteristics.

Despite their impressive accuracy, synthetic voices are still unable to convey the subtle human characteristics that make speech truly authentic.

Typical signs of artificial sound include:

- unnatural lip movement;

- flawless pronunciation, devoid of the imperfections inherent in human speech;

- robotic intonation of individual words or phrases;

- absence of natural background noise;

- phrases or terms that a person would be unlikely to use in real life.

Let’s do a quick surface-level analysis of one of the videos from our dataset.

It is highly probable that the image of Solomiia Vitvitska was used as the basis for the deepfake. A video posted on TikTok on xxxx claimed that a new petition had emerged and was spreading, allegedly obliging politicians and officials to mobilize the Ukrainian army.

However, it immediately becomes clear that this is undisclosed AI-generated content: just look closely at the «host's» hands. In particular, in the first second, you can see a notebook disappear from her left hand.

At the 3-second mark, the presenter's gaze is unnaturally directed somewhere to the side, rather than at the camera. Additionally, the folder (tablet) at the bottom reflects light, creating an abnormal shadow.

It can be seen that the host's hair moves unnaturally: in different parts of the video, individual hair strands appear and disappear.

At the 17-second mark, the «host» bends her back unnaturally, which is typical for deepfake generation. You can also notice the lip articulation characteristic of AI generation.

At the 21-second mark, we see that the lighting on the face does not always match the lighting in the background. The «host’s» face appears overly smooth, especially in the forehead and eyebrow area; the skin texture looks unnatural.

How to recognize a fake video

Throughout the entire video, the «host» moves her hands in an unnatural manner. In several frames, her eyes appear to be “hanging” in space — not focusing on the camera. The background looks artificial. The audio track also exhibits signs of artificial generation, including robotic intonation, incorrect stress and pauses, and occasionally a mismatch between the spoken words and the lip movements.

It is necessary to evaluate not only the audiovisual component, but also the content of the messages. Phrases such as "everyone is silent that..." and "Ukrainians, have you heard...", oppositional vocabulary ("us versus them" or "ordinary Ukrainians versus the authorities"), a lack of specifics ("a well-known doctor", left unnamed, "made a loud statement"), and the absence of references to sources ("most Ukrainians did not know about this") are all typical manipulation techniques aimed at evoking an emotional response and retaining the user's attention.
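As a rough illustration, the textual warning signs above could be turned into a simple phrase check on a video's caption or transcript. The phrase list below uses the English renderings quoted in this article and the category names are our own labels; a real check would need the original-language phrases and would still require human judgment.

```python
# English renderings of the example phrases; category names are illustrative.
MARKERS = {
    "secrecy framing": ["everyone is silent that"],
    "direct address": ["ukrainians, have you heard"],
    "us-versus-them": ["ordinary ukrainians"],
    "unnamed authority": ["a well-known doctor"],
    "unsourced claim": ["most ukrainians did not know about this"],
}

def find_markers(text: str) -> list[str]:
    """Return the sorted names of marker categories found in the text."""
    lowered = text.lower()
    return sorted(
        label
        for label, phrases in MARKERS.items()
        if any(phrase in lowered for phrase in phrases)
    )

print(find_markers("Everyone is silent that ordinary Ukrainians will pay more."))
print(find_markers("Weather forecast for Kyiv."))
```

A match is only a prompt for closer inspection: ordinary, truthful posts can contain the same phrases.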

Instead of a conclusion

In its new study, the non-governmental organization Women in Media notes that cases of AI technology being used to organize online attacks on female journalists are not yet widespread. However, such incidents are already being recorded, and with the development of technology, their scale may grow rapidly.

A survey of female journalists revealed that out of 119 respondents, one in fifteen (7%) had already experienced online attacks generated with the assistance of AI. Another 16% had witnessed such attacks against their colleagues. Female journalists and media executives, serving as public representatives of editorial offices, are often the primary targets.

Additionally, 43% of respondents reported seeing AI-generated content at least once a week, indicating the growing presence of such material in the media landscape.

What our research showed

Digital avatars are becoming a tool of violence. AI makes it possible to create realistic copies of female journalists who can be manipulated to say or do things that never actually happened, thereby undermining their reputation and safety.

The reputational and psychological consequences are severe. Videos with fake voices and faces of female journalists provoke cyberbullying and online hate and create a chilling effect for other women in the media.

AI accelerates disinformation. Thanks to TikTok’s algorithms, AI-generated videos quickly gain millions of views, making verification and countering fakes much more difficult.

A new form of digital violence. The hijacking of journalists’ likeness and voice is a deliberate tool of manipulation that combines online violence, discrediting, and potential risks to national security.

TikTok has formally committed to ensuring transparency for content created or significantly altered by artificial intelligence. The platform's policies require such videos and images to be clearly labeled either by the user or automatically through the Content Credentials system. In theory, this should prevent the spread of synthetic content. It is also prohibited to create content that imitates real people without their consent, misleads, or spreads misinformation.

In practice, however, compliance with these rules is inconsistent. Our research found that numerous AI-generated videos featuring real journalists were published without proper labeling, sometimes containing misinformation or insults. TikTok's policies are often ignored, calling into question the effectiveness of protecting users from synthetic content and coordinated inauthentic behavior.

Although TikTok is investing in technologies to detect and inform users, the gap between policy and reality suggests that promises of transparency remain largely declarative.

Based on our research, as well as UNESCO's Guidelines for the Regulation of Digital Platforms, we have prepared a series of recommendations.

For governments and public institutions

- Develop national strategies for regulating digital platforms, taking into account existing local and international norms.

- Ensure a balance between self-regulation, co-regulation, and statutory regulation to comprehensively cover various aspects of the digital environment.

- Involve all key stakeholders: the state, platforms, civil society, the media, academics, and independent experts.

- Establish requirements for platforms to report transparently on content moderation and removal, as well as on human rights compliance.

- Require platforms to create easily accessible systems for reporting policy and human rights violations, with a particular focus on children and vulnerable groups.

For users

- Use platform tools to regulate content and personalize recommendations.

- Critically evaluate information and sources, verify the reliability of materials.

- Know your rights on the platform and use appeal mechanisms if content has been restricted or removed.

- Report violations of platform policies through available complaint channels.

- Learn to critically perceive content and use digital services safely.

For victims of online violence

- Use special tools to report threatening or violent content.

- Contact moderators or independent organizations for quick resolution of the problem.

- Seek support from specialized organizations, human rights groups, or psychological services.

For digital platforms

- Implement systems to assess risks and impacts on human rights and gender equality. Ensure that product design and content moderation take into account international human rights standards.

- Publicly disclose moderation policies, algorithms, and content restrictions. Explain to users the reasons for removing or restricting content and provide opportunities for appeal.

- Ensure the effective operation of automated and manual moderation systems, including verification of accuracy and non-discrimination. Quickly remove dangerous content.

- Communicate community standards and appeal processes in clear language and accessible formats.

- Support users' information and media literacy, including safe interaction with the platform and critical evaluation of content.

This material was prepared by Texty.org.ua and the NGO “Women in Media” in partnership with UNESCO and with the support of Japan. The authors are responsible for the choice and presentation of the facts contained in this material and for the opinions expressed herein, which do not necessarily reflect the views of UNESCO and do not place any obligations on the Organization.