Carousel of Emotions. Manipulation Level of Ukrainian Telegram Channels

We have trained a large language model to detect the most common manipulation techniques in Telegram channel posts.

Note that we did not analyze the content of the posts. We analyzed the structure of the language and determined the techniques that make the text more emotional, thus diverting attention from facts and evidence. We use "manipulative" in this sense.

When we scroll through the feed in Telegram, someone is trying to influence us with various tricks and techniques. The language of Telegram, at least for most channels, is different from the language of online media. It is harsher and more emotional, and the pieces themselves are shorter, often lacking context or evidence to support the points being made.

Here's an example where the tone is set from the very first word and tuned to emotional perception using the “loaded language” technique:

Examples of posts

Fuck! [instantly evokes emotions]

You can die for the country speaking Russian, but you CAN'T live in it speaking Russian [manipulative simplified opposition, the task is to cause a sense of injustice]

Perhaps the authors imitate colloquial speech and try to write as close to the language of their audience as possible, the way friends speak when they share news. But the result is that hundreds of thousands of readers are emotionally manipulated.

While our "precious darlings" [discredits and causes contempt] are raising 100 hryvnias each to buy cars for the defenders of Ukraine, this vatnik-bastard, a friend of the potato fuhrer [aggressive vocabulary creates a negative image...], buys herself a luxury G-Wagen with money acquired through crime [...and reduces the criticality of information perception]

Words with a strong emotional connotation affect the way we perceive information. When we are outraged, we may not notice that there is a lack of evidence. The same is true when we are happy.

Positive emotions are exploited as well. Frequently used manipulation techniques include euphoria (creating a feeling of excessive happiness to, for example, boost morale or spread a particular program or ideology) and glittering generalities (an attempt to evoke strong emotional reactions and a sense of solidarity through abstract concepts such as “freedom,” “justice,” and “patriotism”).

Examples of posts

The General Staff managed to carry out the operation to capture Avdiivka with minimal losses, it will go down in the textbooks of the Ministry of Defense — Shoigu [euphoria for Russians: distortion of information about losses, heroization of the Russian military, creation of an ideal picture of the professionalism of the Russian army]

Let's start the day with positive news! [causes emotional uplift]

On russia [intentional use of the incorrect preposition conveys disdain], they decided to please the mothers of cannon fodder [emotional words] and announced an increase in the production of the Lada car! Avtovaz expects to start delivering cars to more than 300 dealerships in May. The Ukrainian counteroffensive is being planned just as the new Lada is being planned. It's all a coincidence! [disdain and ridicule of the enemy leads to underestimation of its capabilities and downplaying of the threat]

Sometimes, excessive emotions can be useful: they boost morale and increase our psychological stability and endurance in the long war against Russia. But needless to say, frequent appeals to them distort the perception of reality.

Victory may not come in two or three weeks. After such an emotional uplift, when a person is confronted with a harsh reality, they are overwhelmed by confusion, panic, and even depression. One loses the efficiency and prudence necessary to survive a war.

However, there are also more destructive techniques, such as appeal to fear and attempts to sow doubt. Russian propaganda often uses these techniques. With their help, manipulators try to use existing fear and prejudice in their favor.

Examples of posts

Ukrainians will struggle to survive: [emotional words] Podolyak said that the country will face a severe [emotional word] winter. Of course [suggests that this is an obvious statement that does not require proof], neither Podolyak, nor Zelenskyy, nor other Ukrainian officials will freeze in their warm offices [the goal is to increase fear, sow a sense of abandonment and hopelessness, and use the traditional Russian propaganda opposition between the government and the people to reduce Ukraine's military capabilities]

How and why is real estate going to be [impersonal - who is the one going?] taken away from the people of Ukraine [generalization with suggestive opposition of an abstract government to an abstract people]

Against the backdrop of the catastrophic deterioration of the well-being of the Ukrainian people in their own country due to the war, migration and sweeping mobilization [emotional words] Ukrainians were shocked by another piece of news. It turns out that Ukraine will legalize the alienation of real estate from citizens for the reconstruction of the country [create a sense of hopelessness and danger, sow distrust in the authorities due to possible abuses]

…

- Loaded language: The use of words and phrases with a strong emotional connotation (positive or negative) to influence the audience.

- Glittering generalities: Exploitation of people's positive attitude towards such abstract concepts as “justice,” “freedom,” “democracy,” “patriotism,” “peace,” “happiness,” “love,” “truth,” “order,” etc. These words and phrases are intended to provoke strong emotional reactions and feelings of solidarity without providing specific information or arguments.

- Euphoria: Using an event that causes euphoria or a feeling of happiness, or a positive event to boost morale. This manipulation is often used by Ukrainians for Ukrainians in order to mobilize the population.

- Appeal to fear: The misuse of fear (often based on stereotypes or prejudices) to support a particular proposal.

- FUD (fear, uncertainty, and doubt): Presenting information about something or someone in such a way as to sow uncertainty and doubt in the audience and make them fear it. This technique is a type of appeal to fear. We have divided it into subtypes: doubts about the Ukrainian government, the Defense Forces, reliable sources of information, assistance from international partners, and other types of doubts.

- Bandwagon/Appeal to people: An attempt to persuade the target audience to join and take action because “others are doing the same thing.” This technique is based on the fact that a certain statement or idea is considered correct because all or most people believe it to be so. And the majority supposedly cannot be wrong.

- Thought-terminating cliché: Short, commonly used phrases that are intended to mitigate cognitive dissonance and block critical thinking. These are usually short, generalized sentences that offer seemingly simple answers to complex questions or distract attention from other lines of thought.

- Whataboutism: Discrediting the opponent's position, accusing them of hypocrisy without directly refuting or denying their arguments, which involves responding to criticism or a question in the format “What about...?”

- Cherry picking: Selective use of only those data or facts that support a particular hypothesis or conclusion and ignore counterarguments or objections.

- Straw man: Distortion of the opponent's position by replacing the thesis with an outwardly similar one (straw man) and refuting it without refuting the original position.

How often do we encounter manipulative vocabulary?

Note: Out of the ten techniques, the language model identified five most accurately: loaded language; glittering generalities; euphoria; fear, uncertainty, and doubt; and appeal to fear. We further combined similar techniques: glittering generalities with euphoria, and appeal to fear with fear, uncertainty, and doubt.
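The merging of similar techniques described in the note can be pictured as a simple label mapping. The sketch below is only an illustration: the label strings and group names are hypothetical, since the article does not publish the identifiers the model actually outputs.

```python
# Hypothetical label names; the article does not publish the model's label strings.
COMBINED_GROUPS = {
    "glittering_generalities": "glittering_generalities_and_euphoria",
    "euphoria": "glittering_generalities_and_euphoria",
    "appeal_to_fear": "fear_and_doubt",
    "fud": "fear_and_doubt",
}


def combine_techniques(labels):
    """Map fine-grained technique labels to merged groups, keeping order and dropping duplicates."""
    merged = []
    for label in labels:
        # Labels without a merged group (e.g. loaded language) pass through unchanged.
        group = COMBINED_GROUPS.get(label, label)
        if group not in merged:
            merged.append(group)
    return merged


example = combine_techniques(["euphoria", "glittering_generalities", "loaded_language"])
print(example)
```

With this mapping, a post tagged with both euphoria and glittering generalities counts once in the merged group rather than twice.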

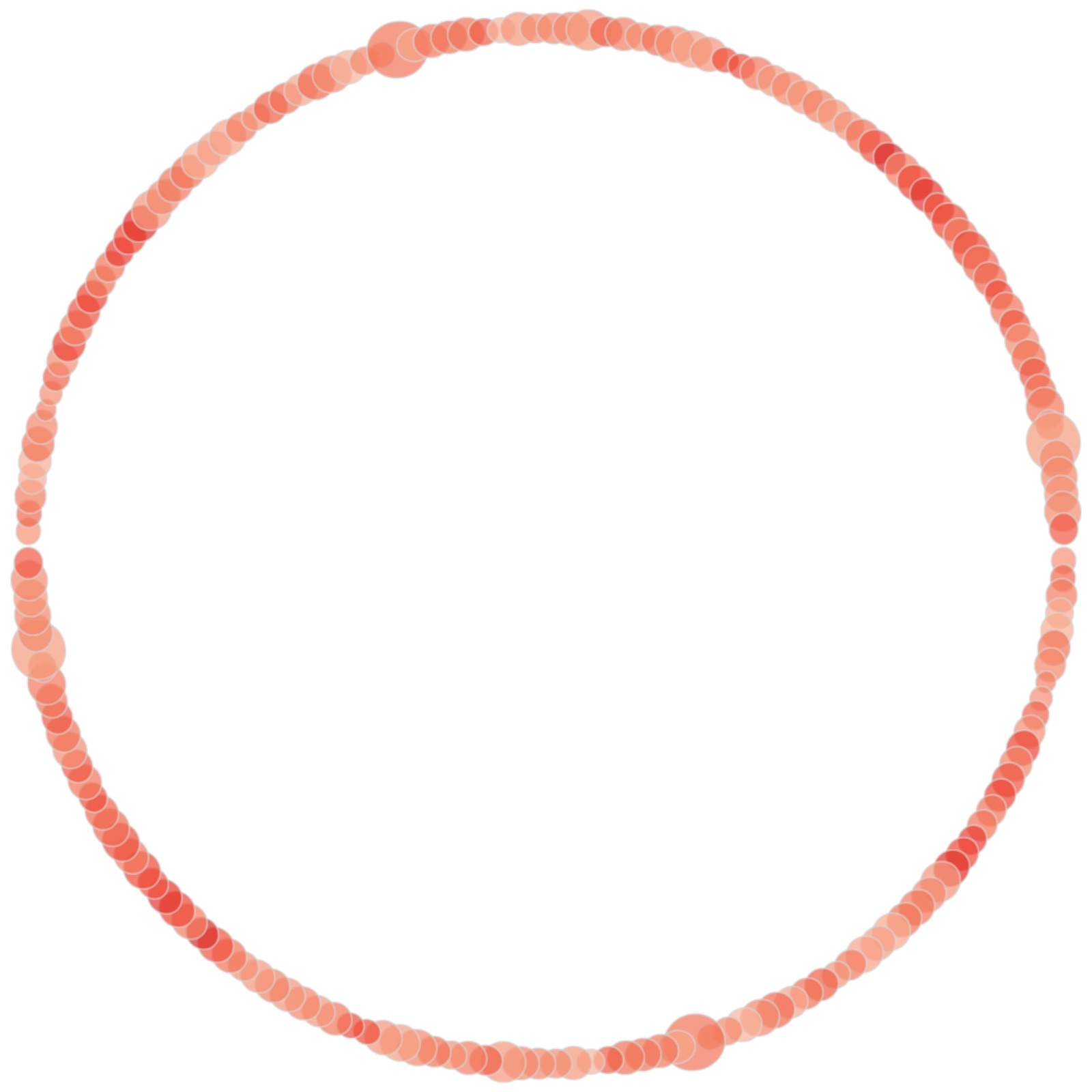

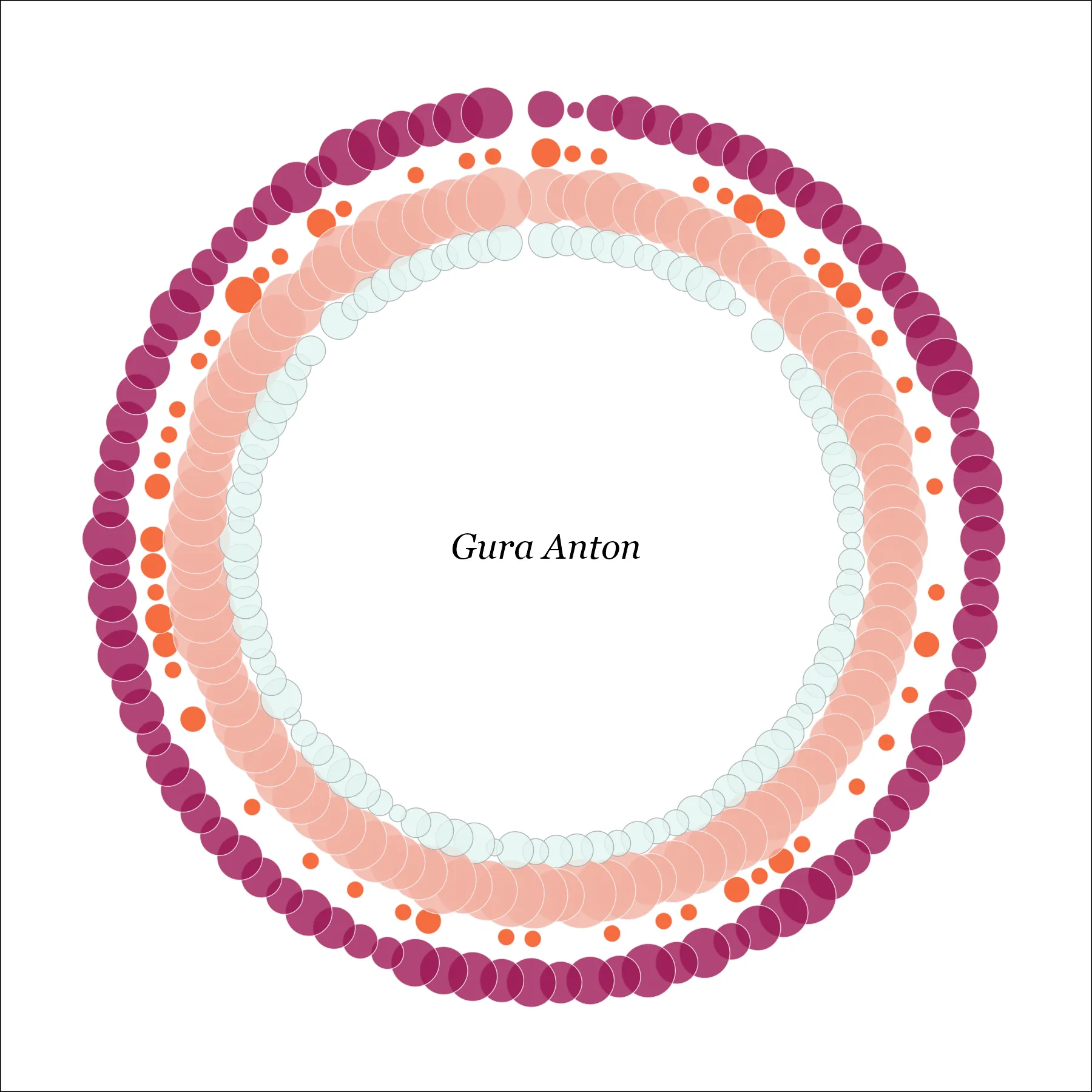

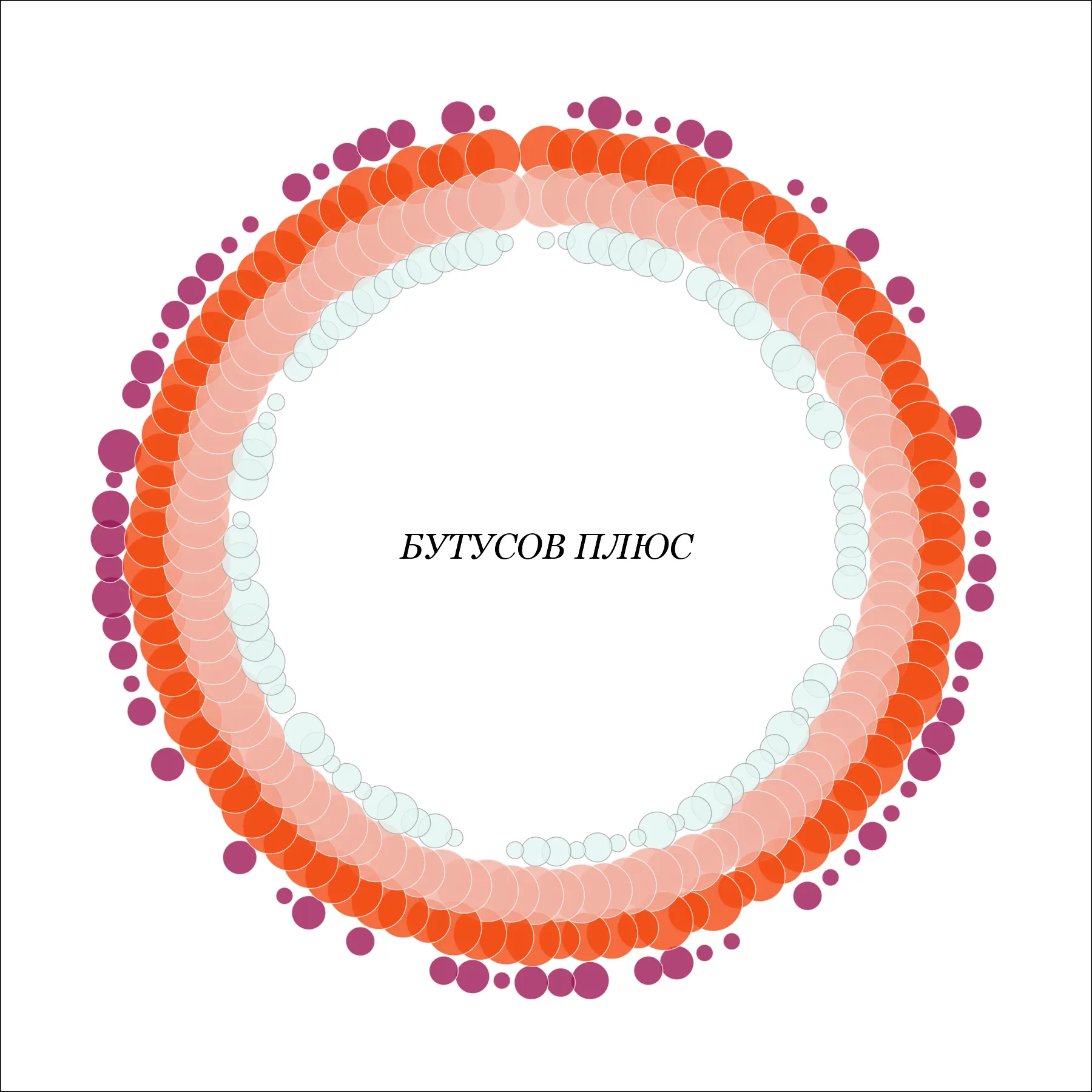

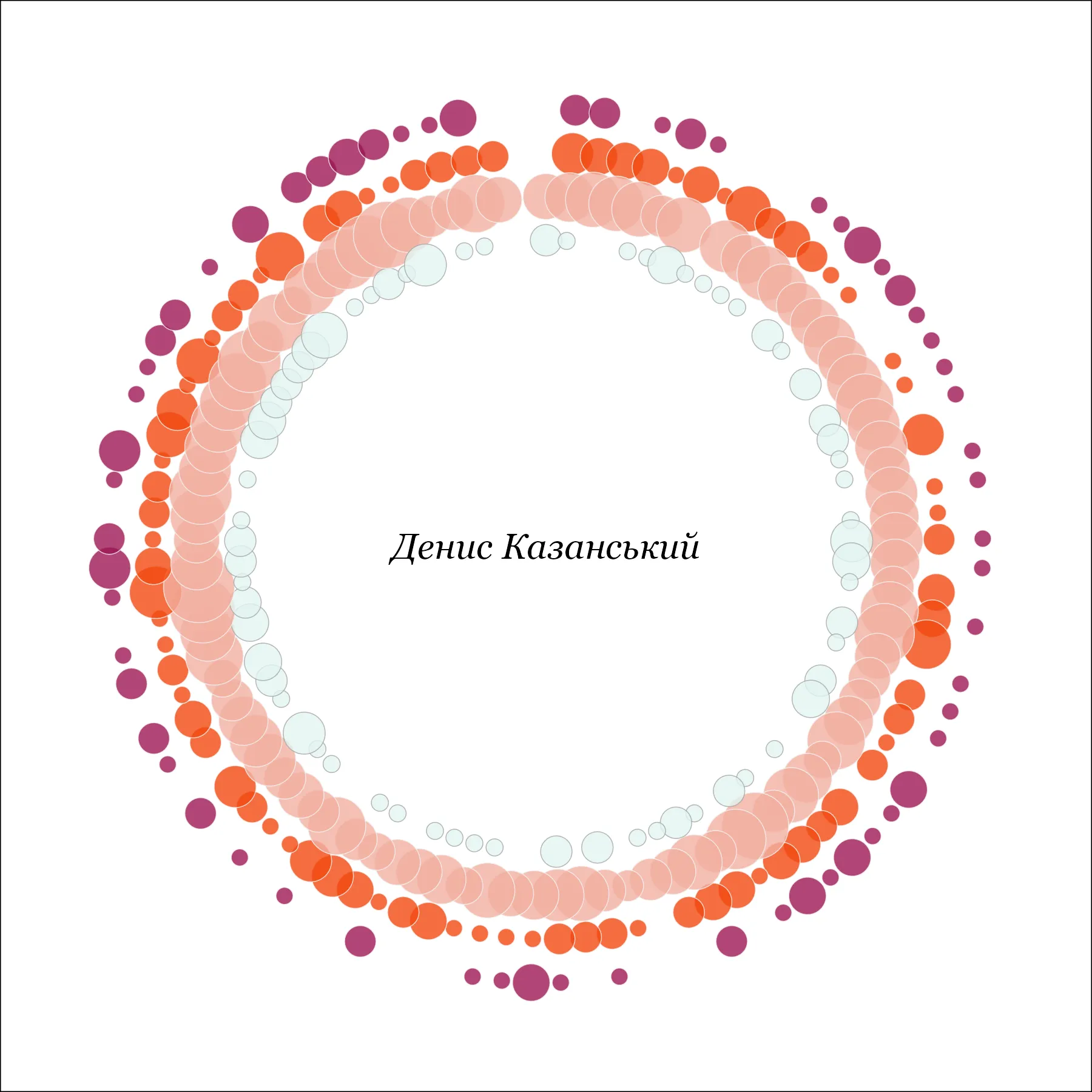

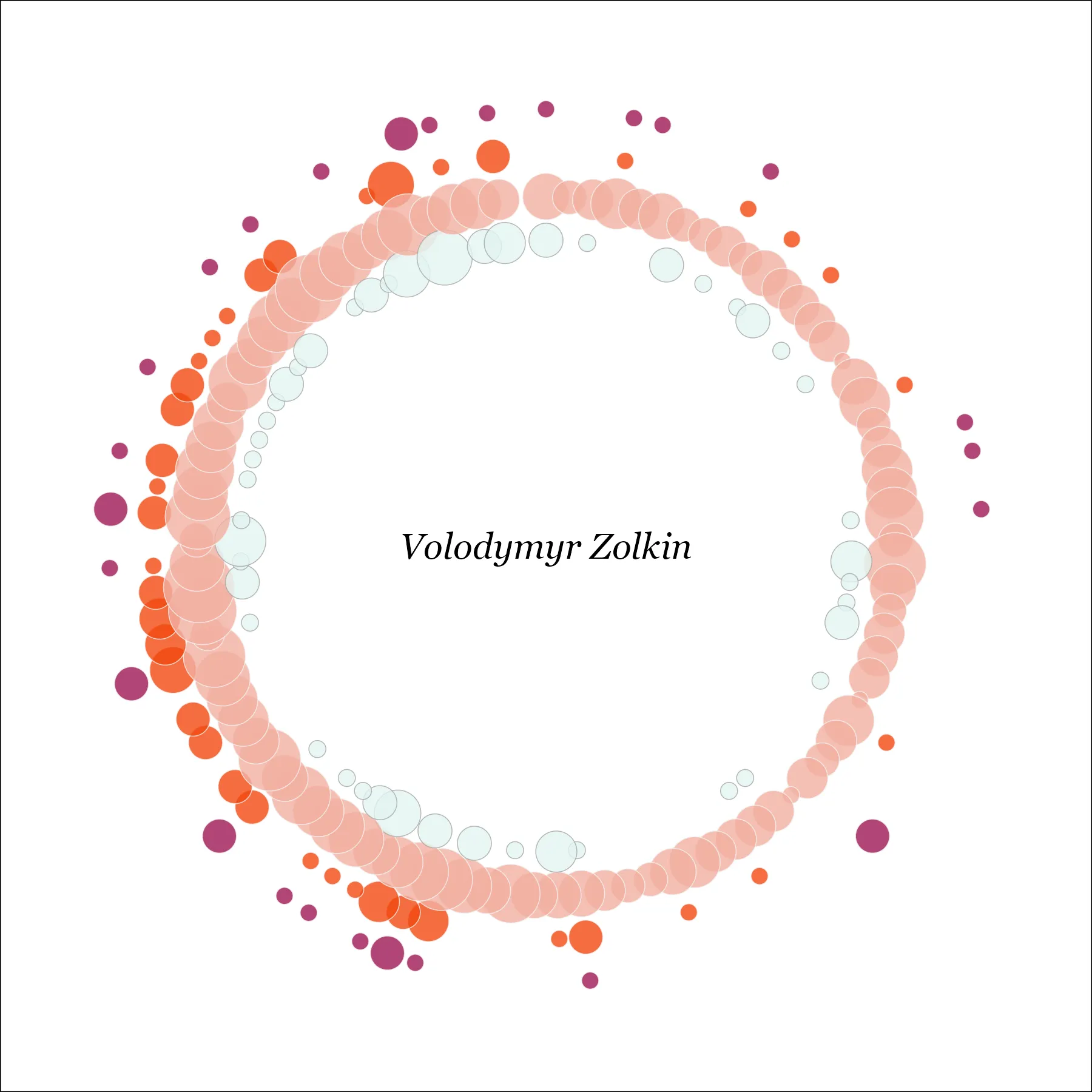

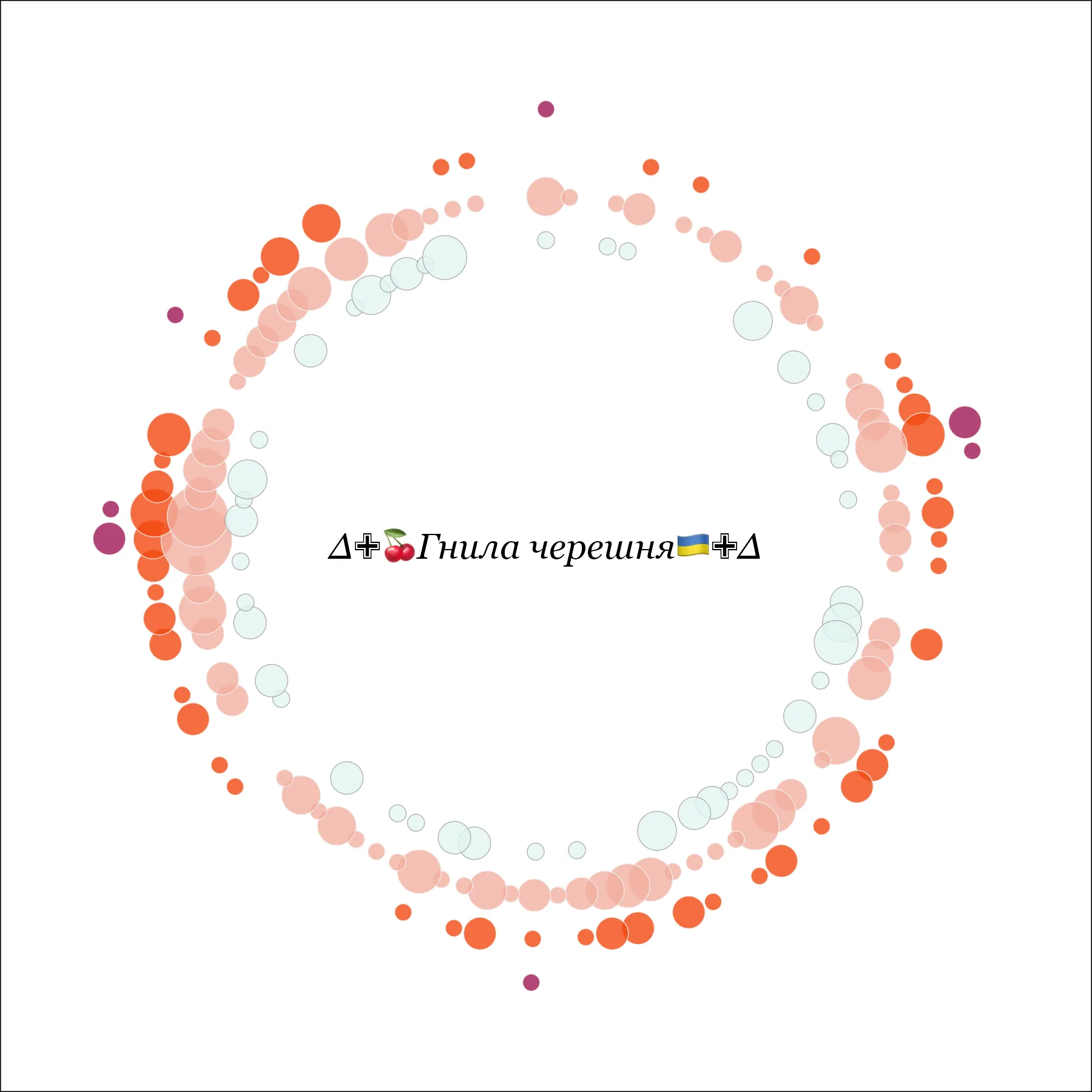

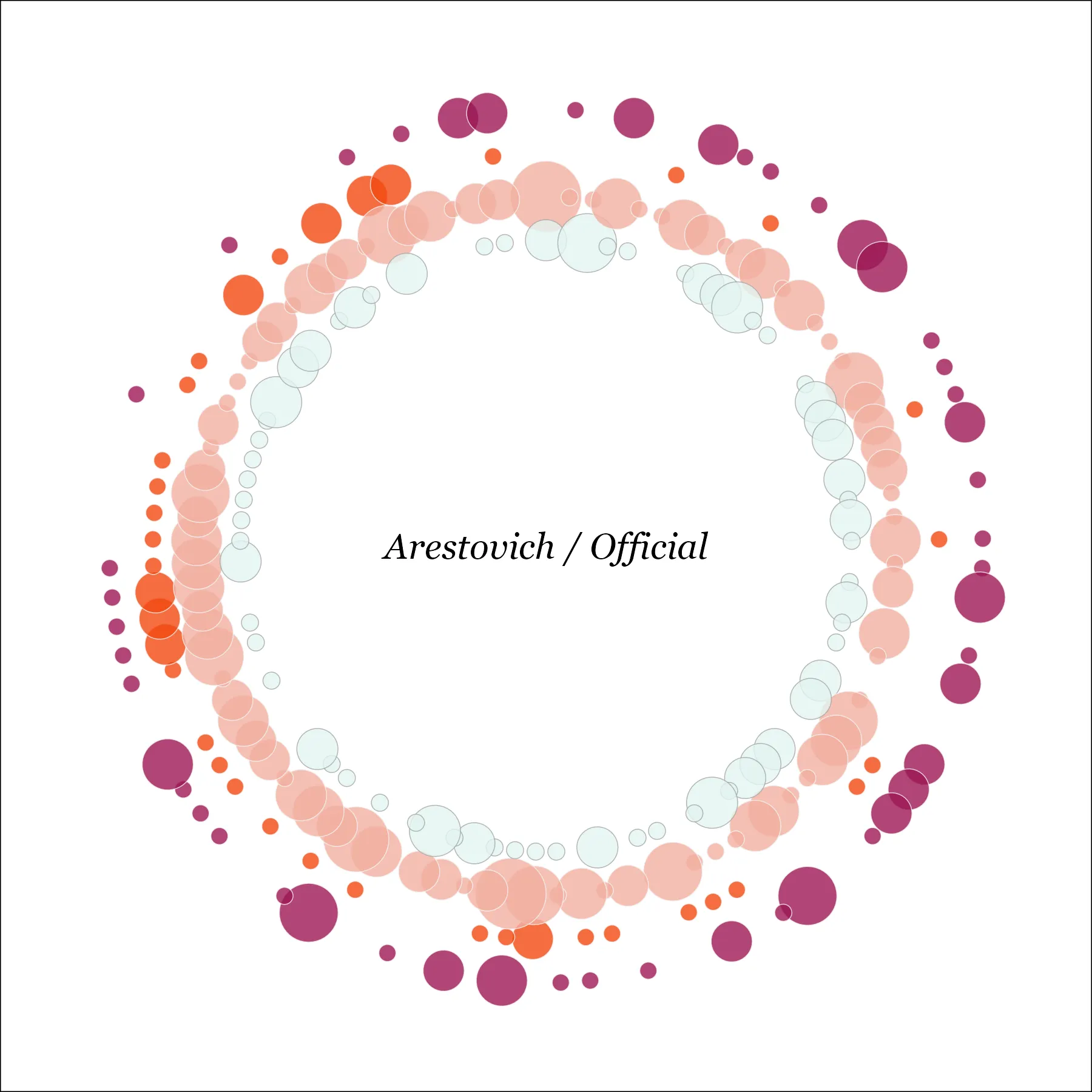

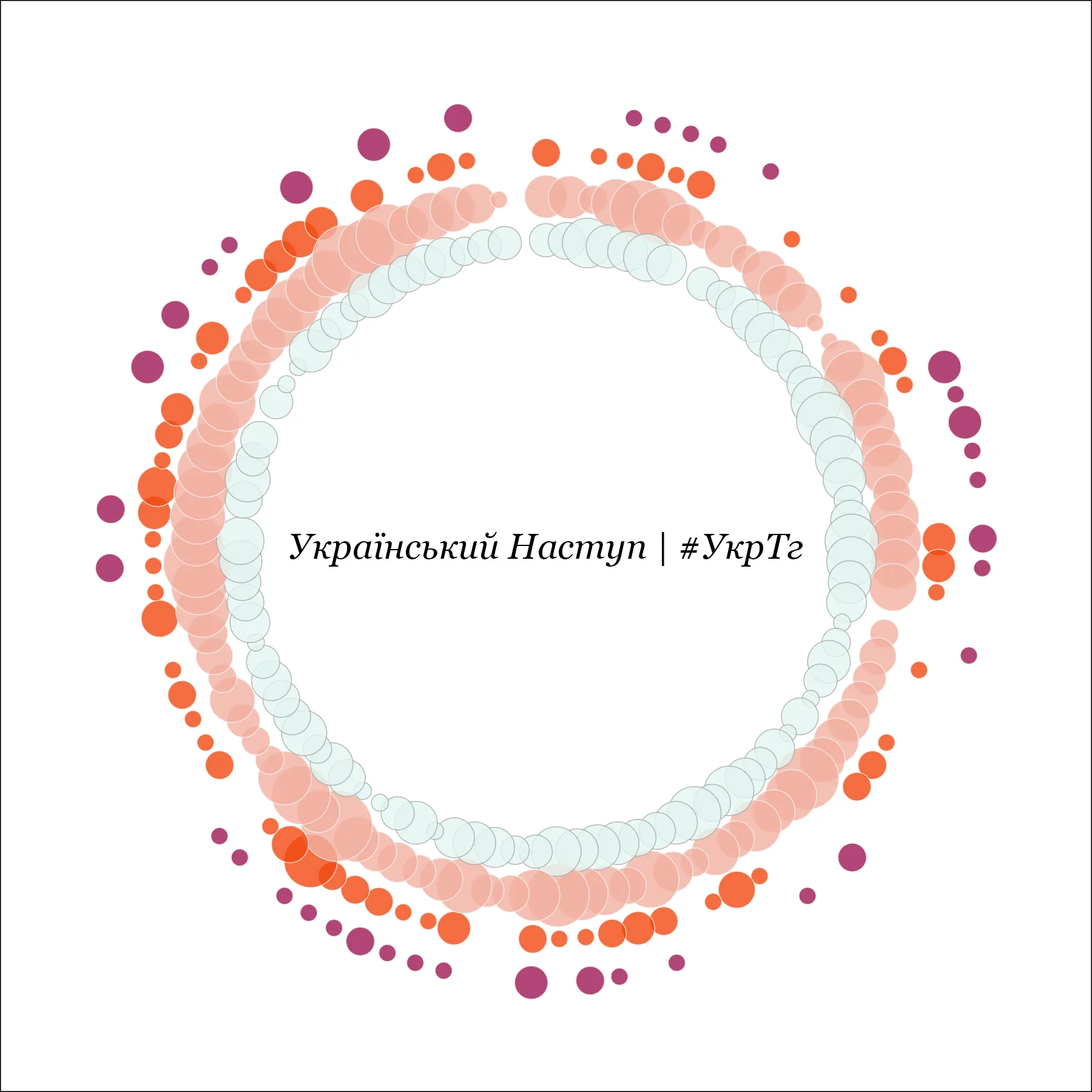

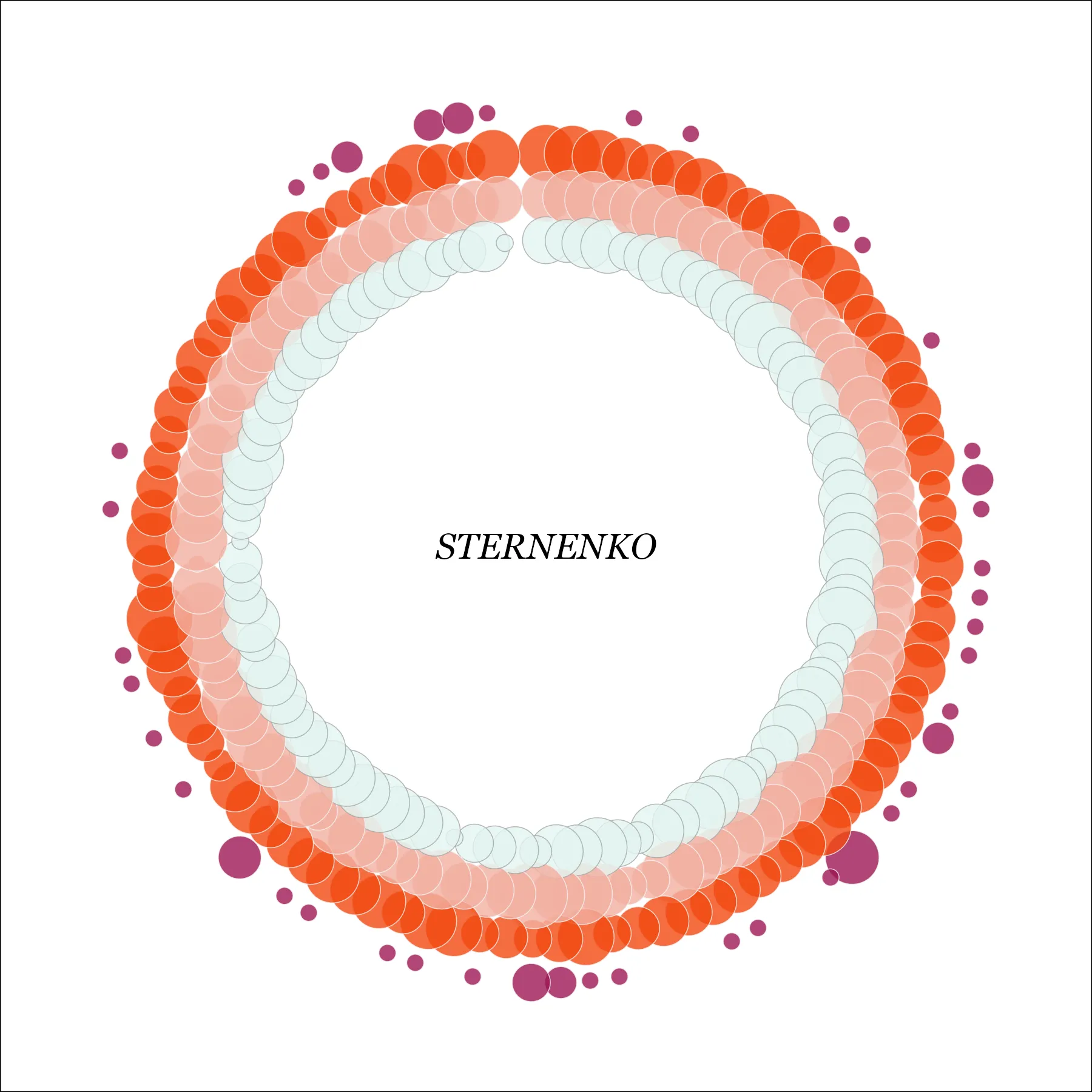

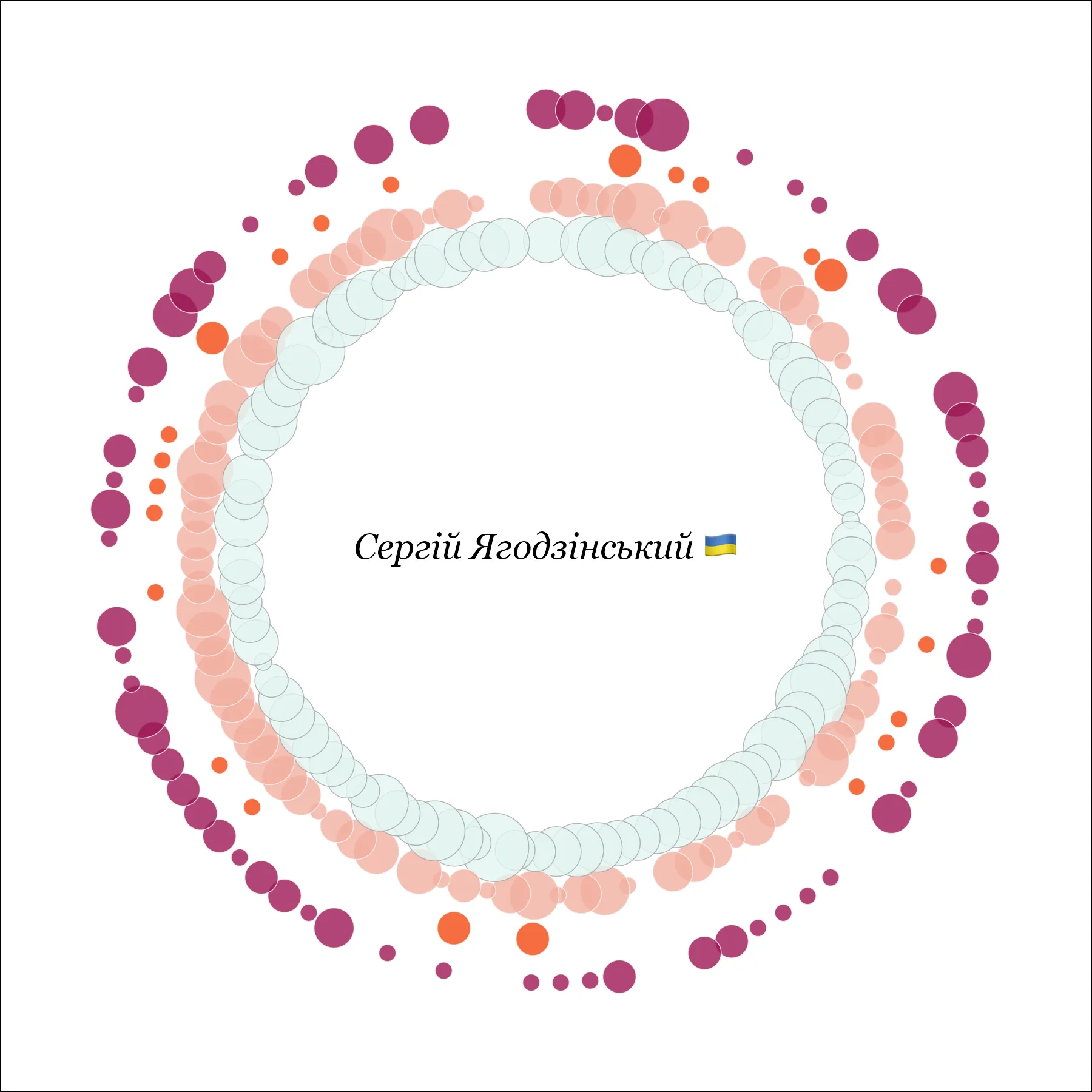

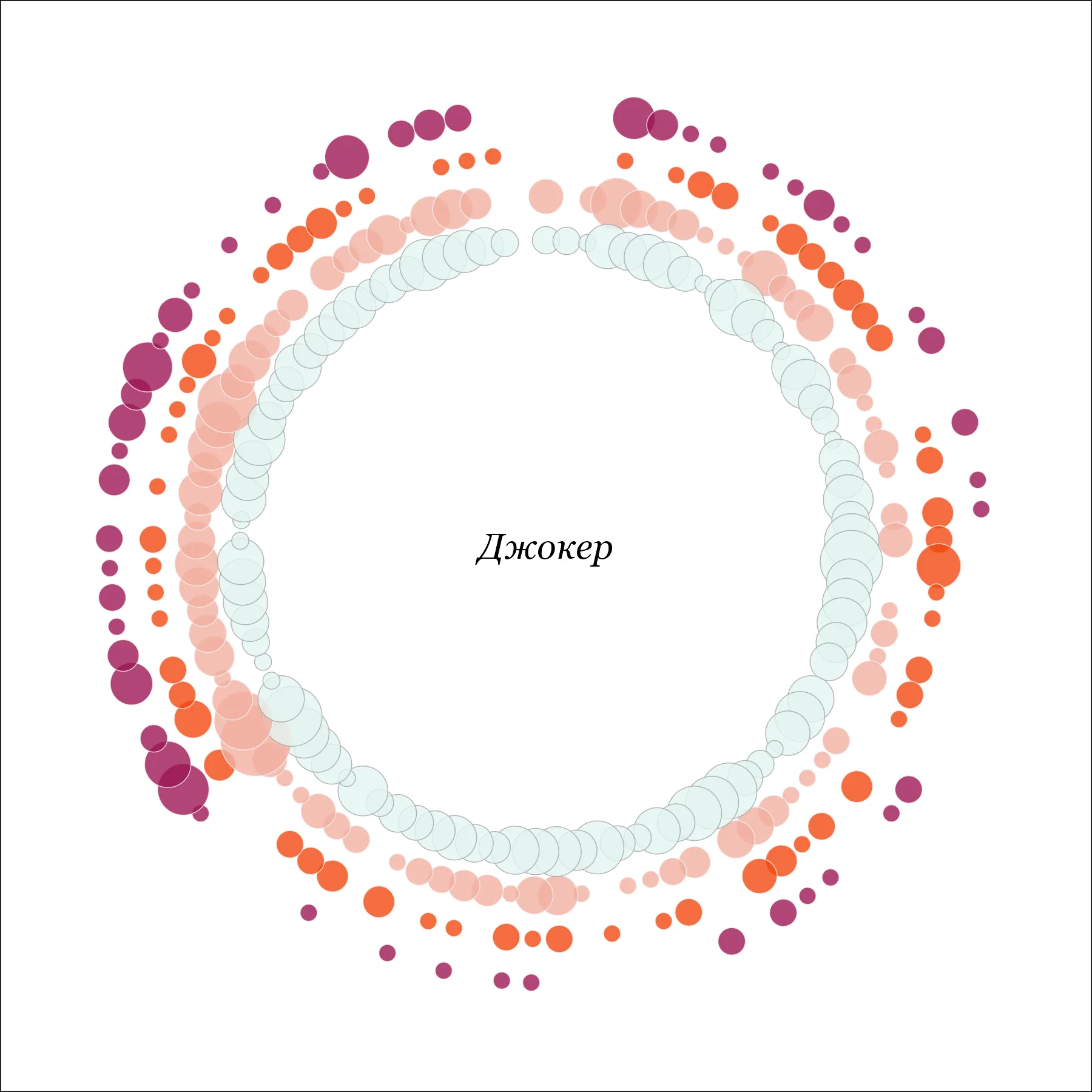

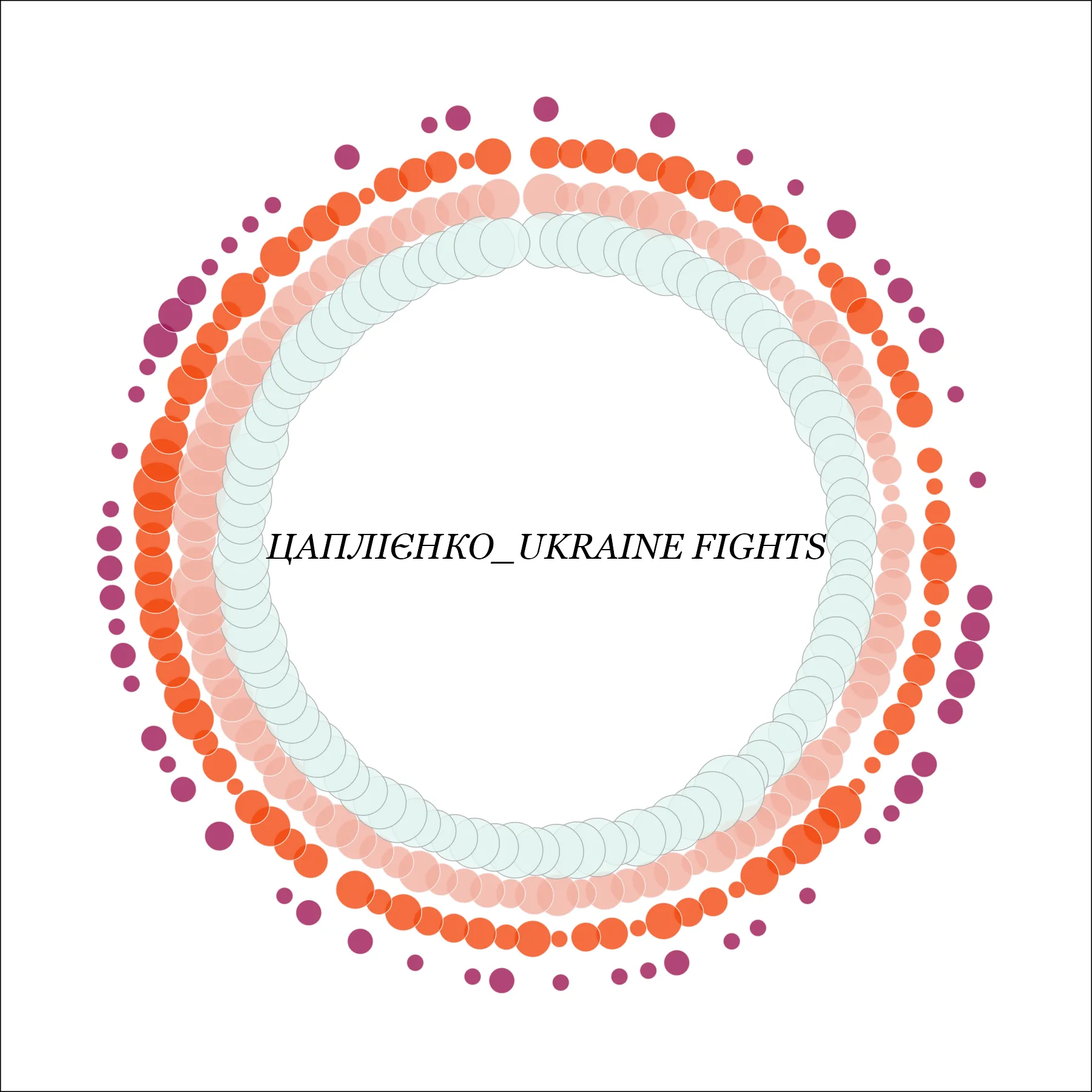

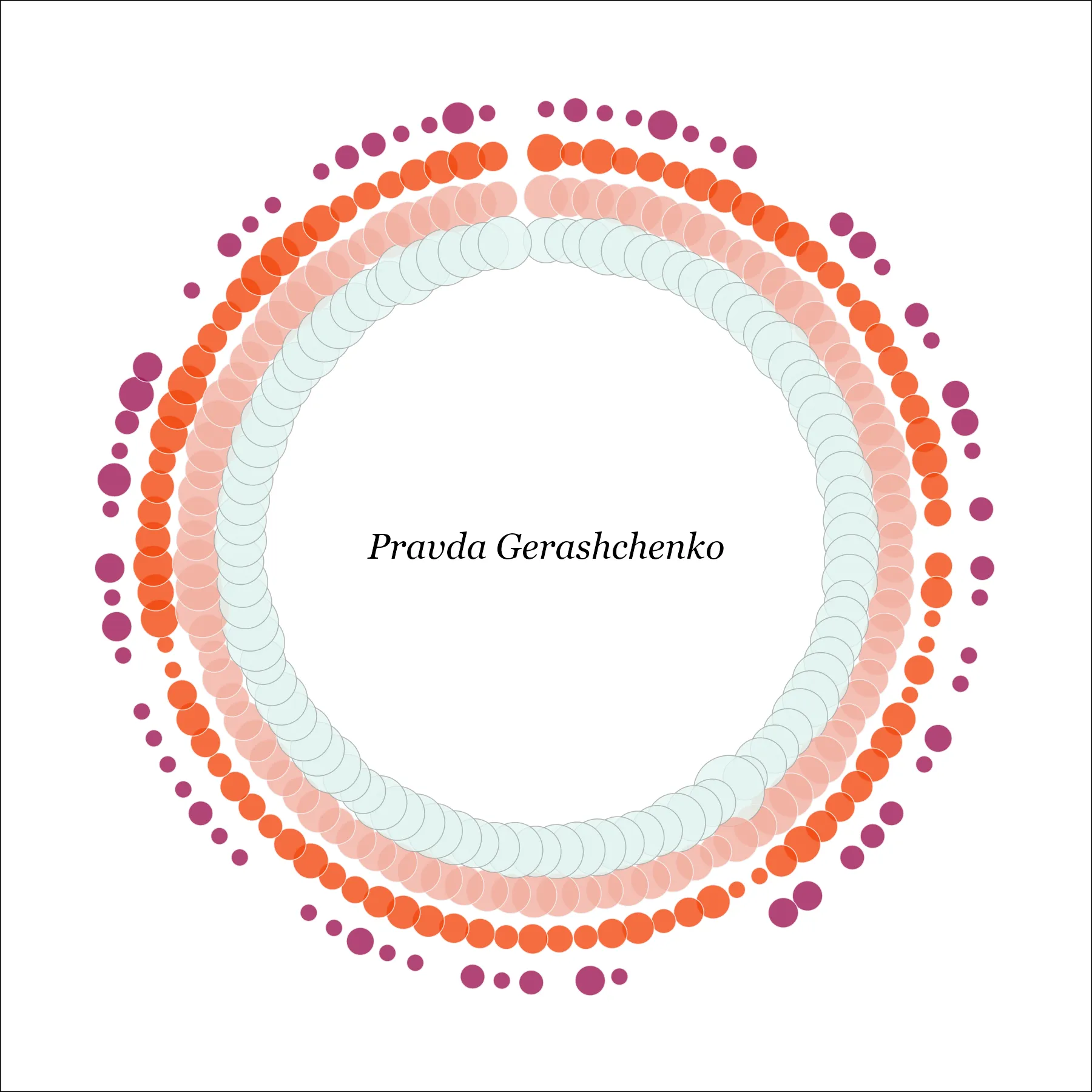

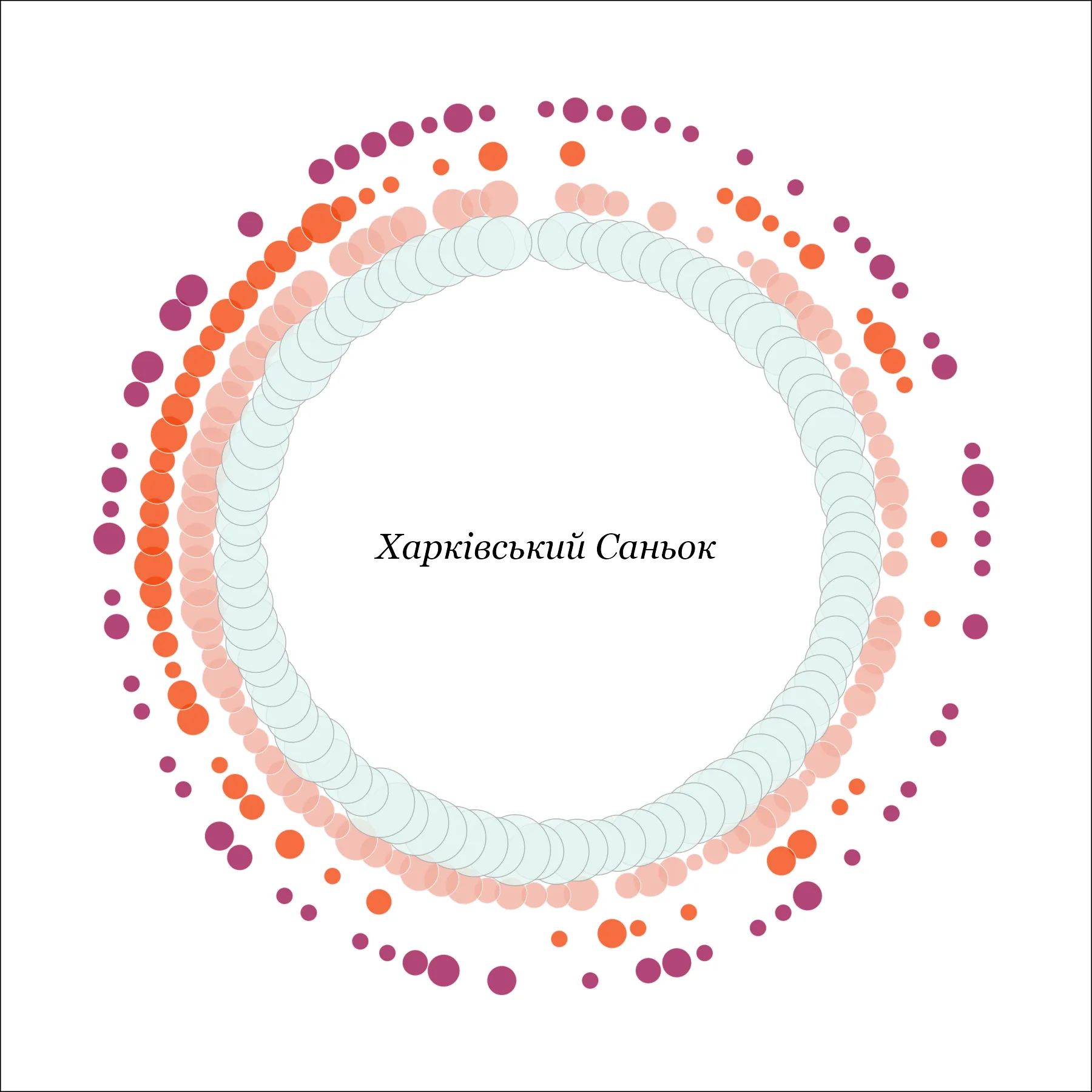

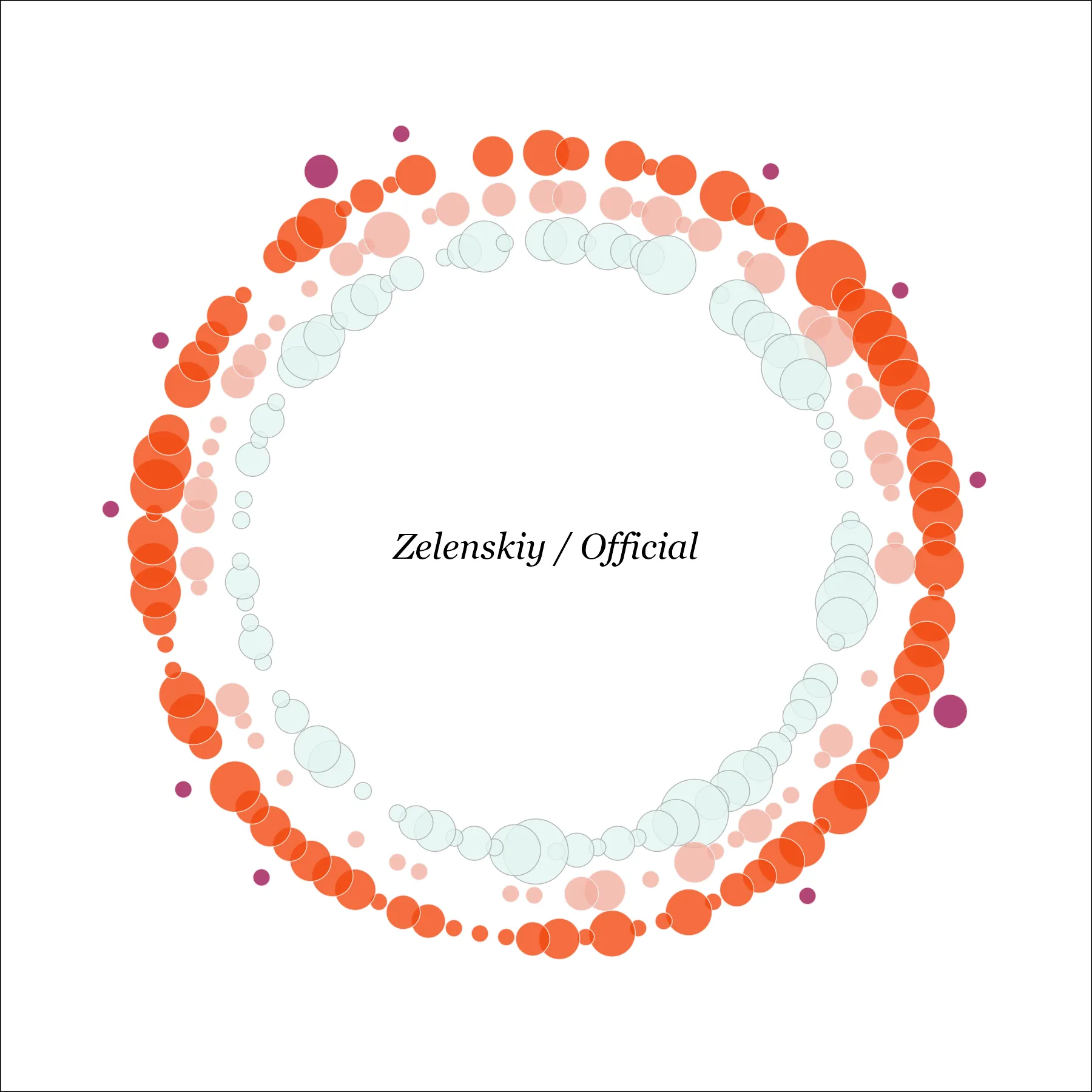

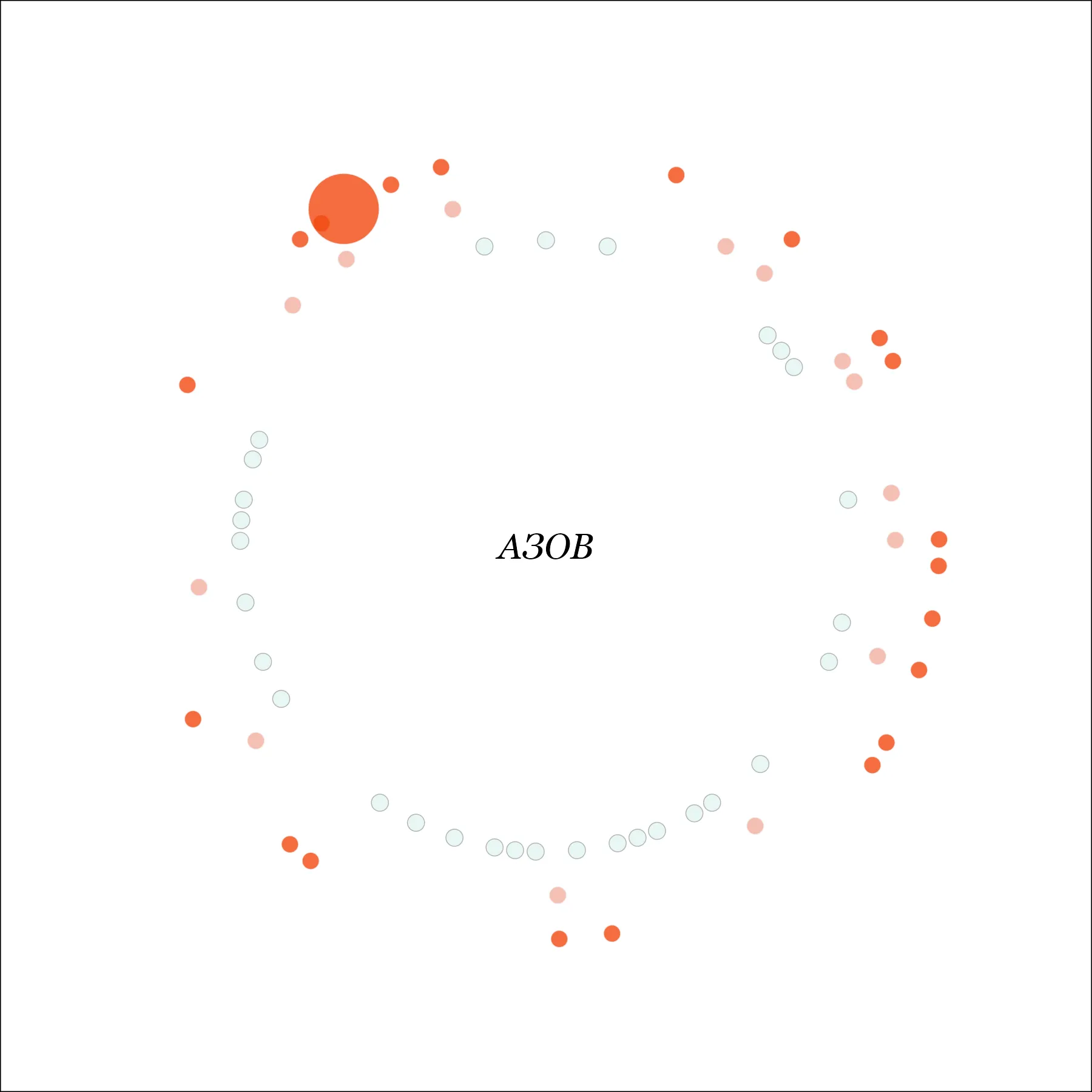

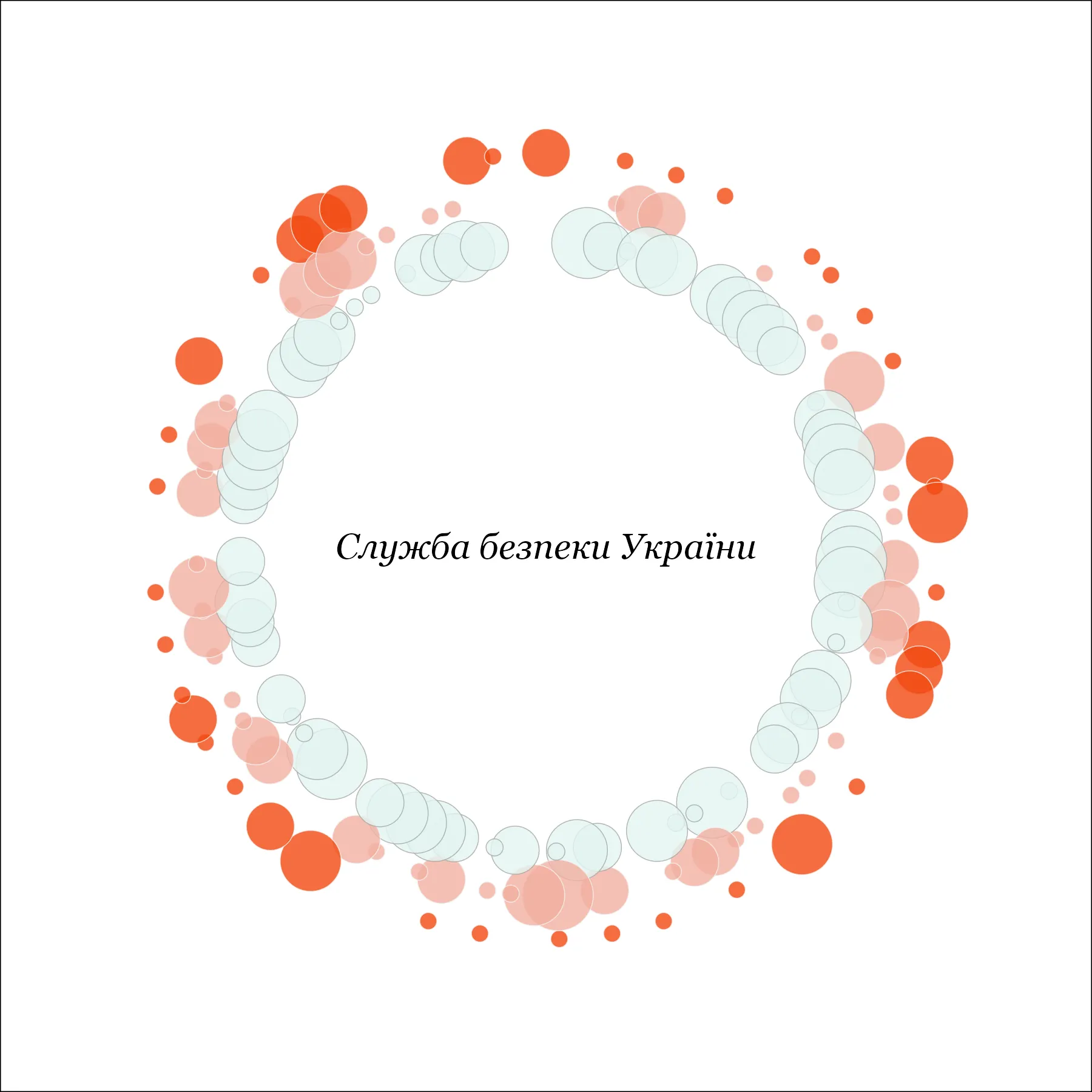

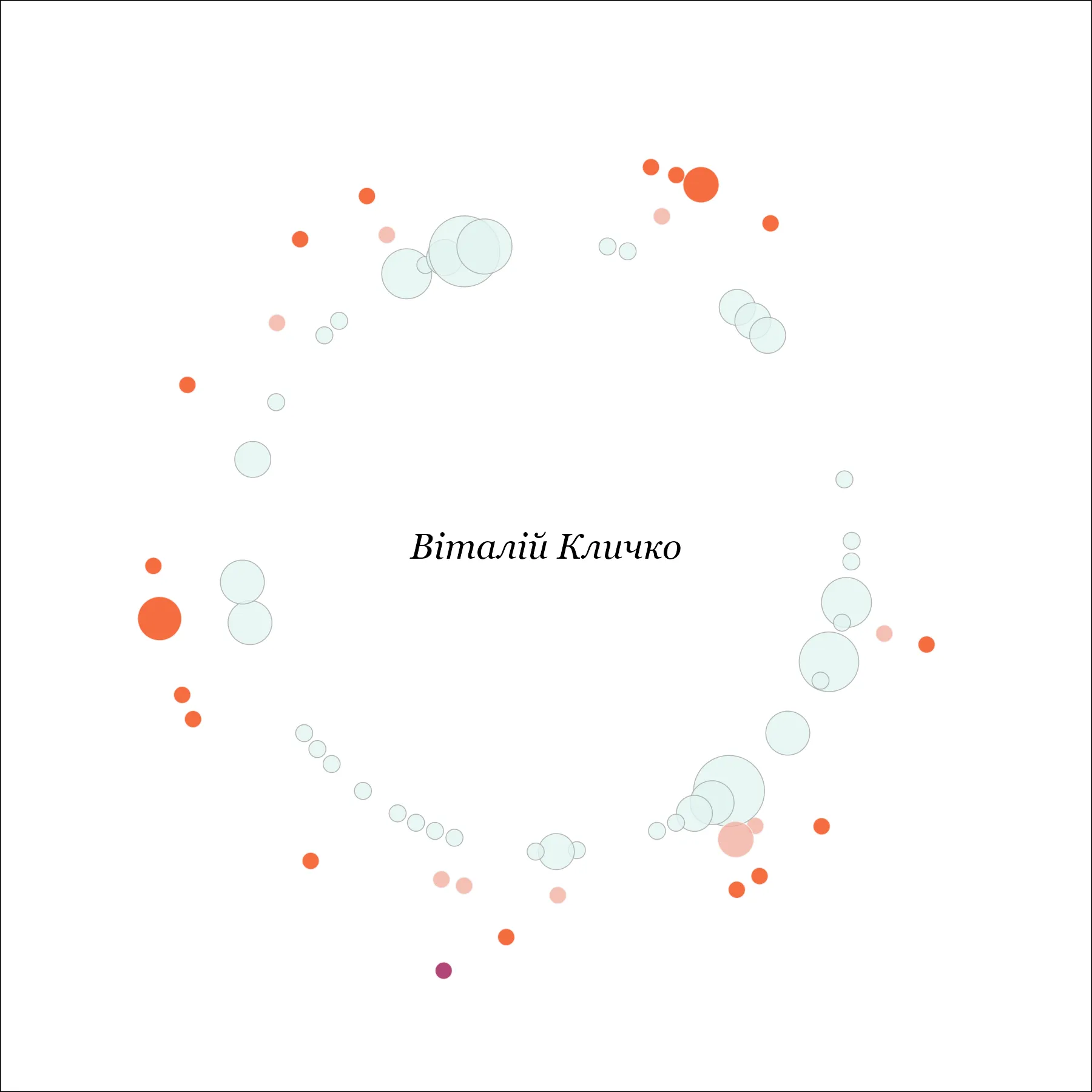

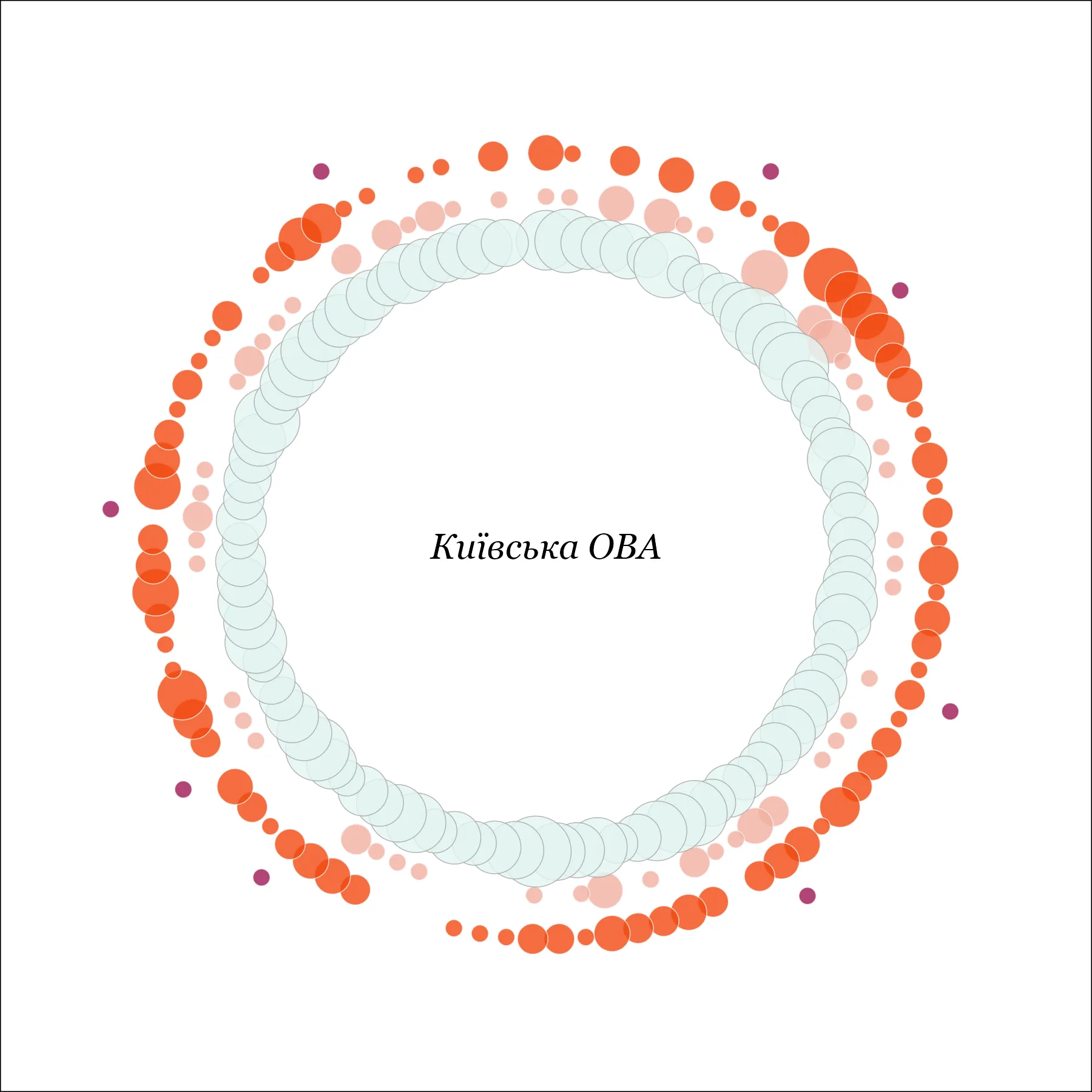

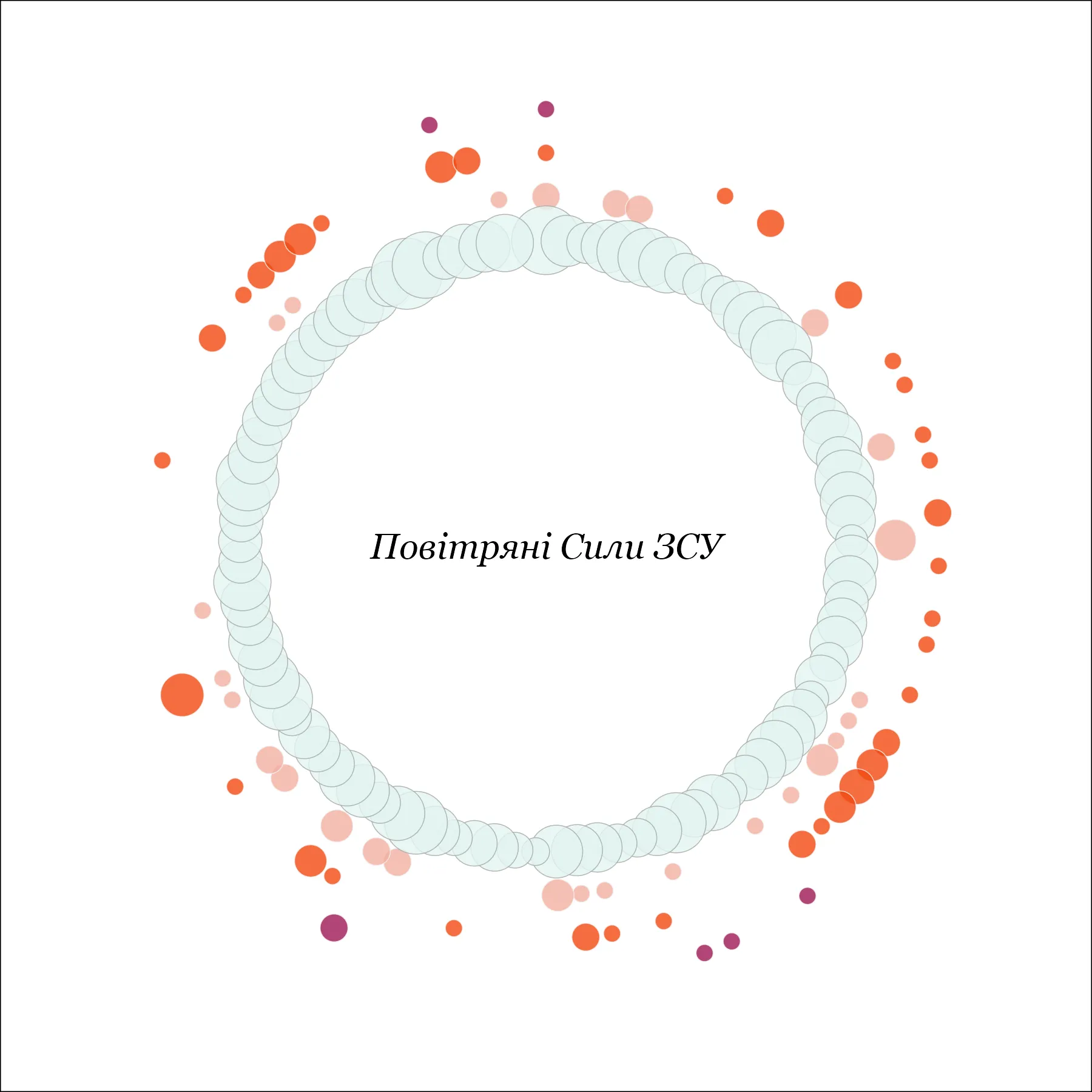

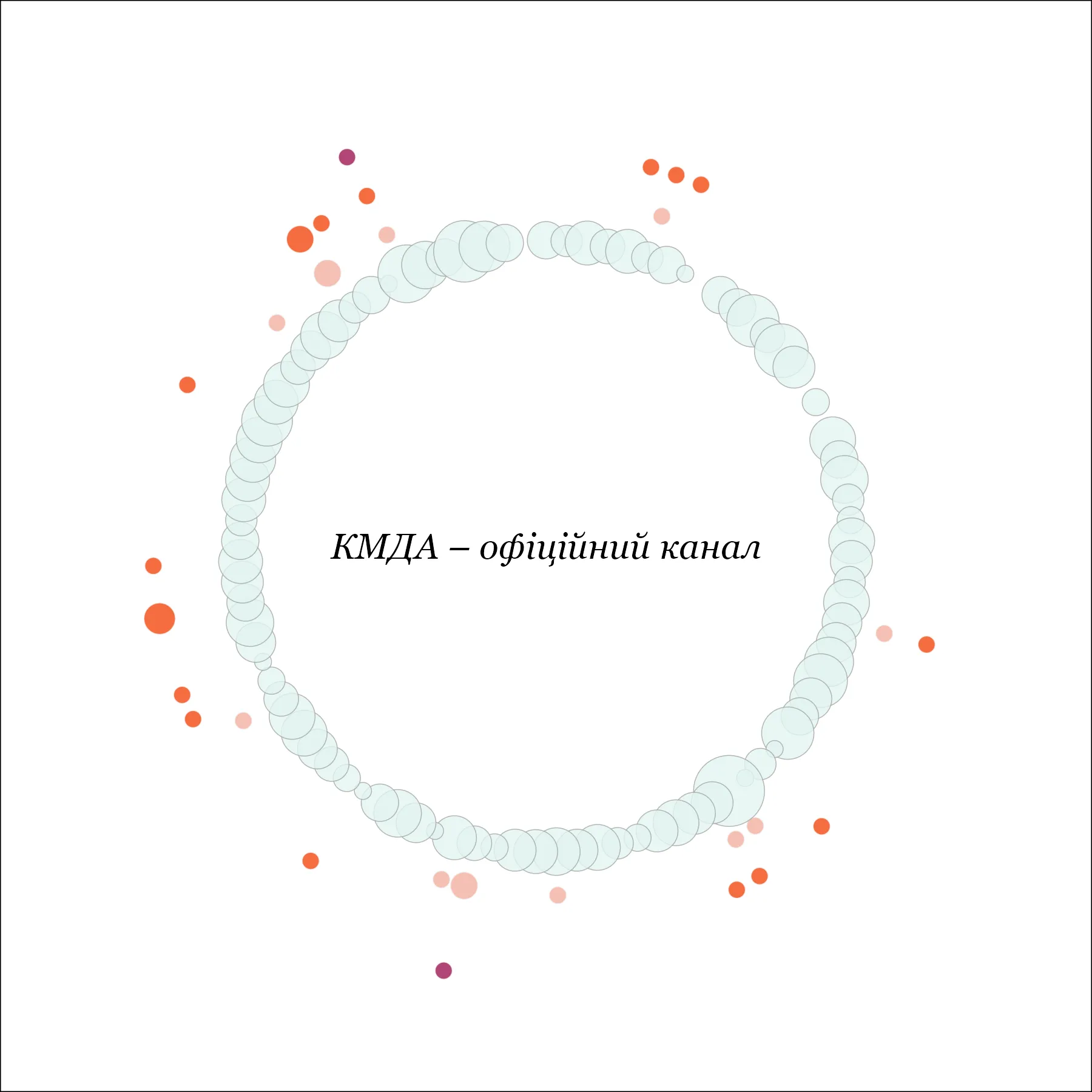

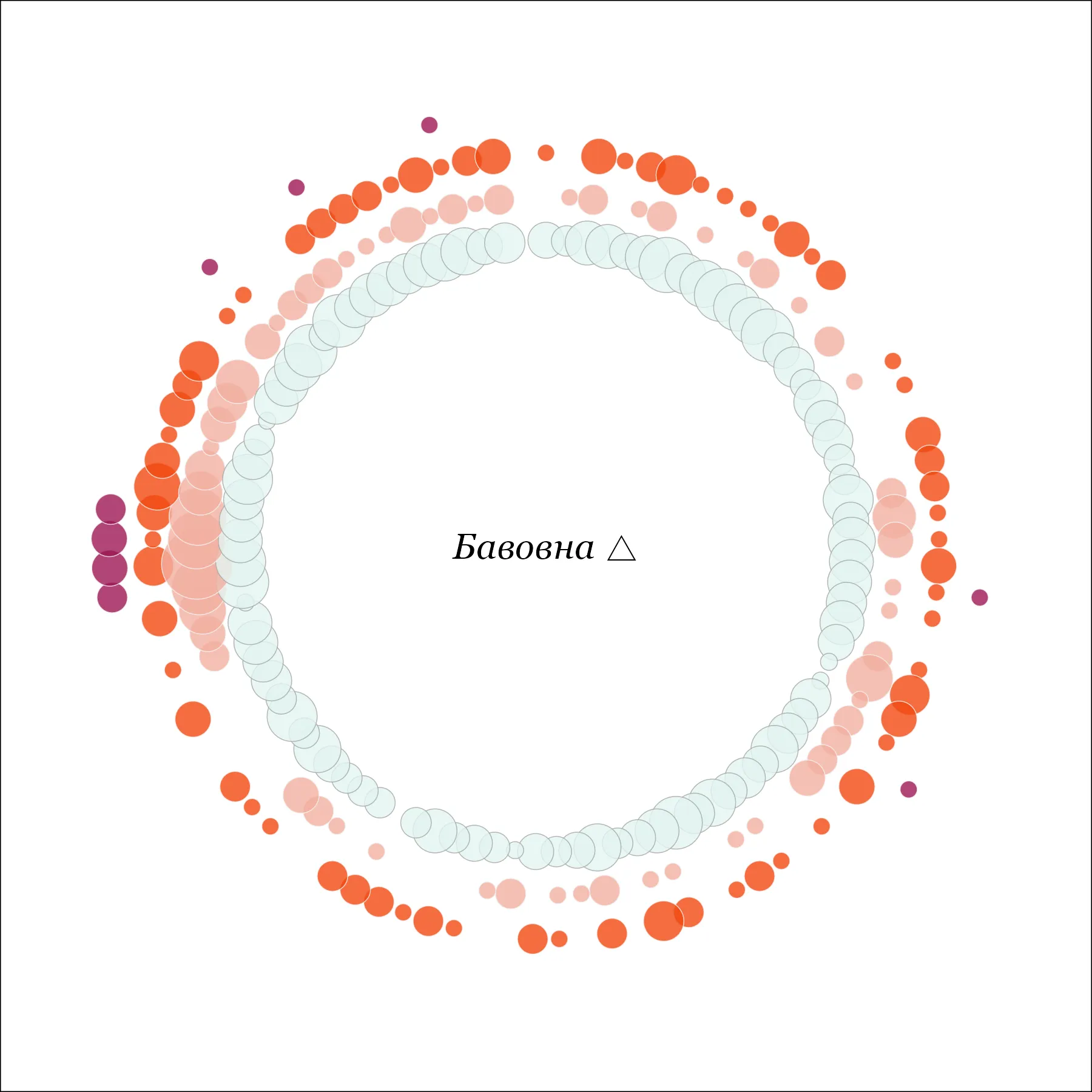

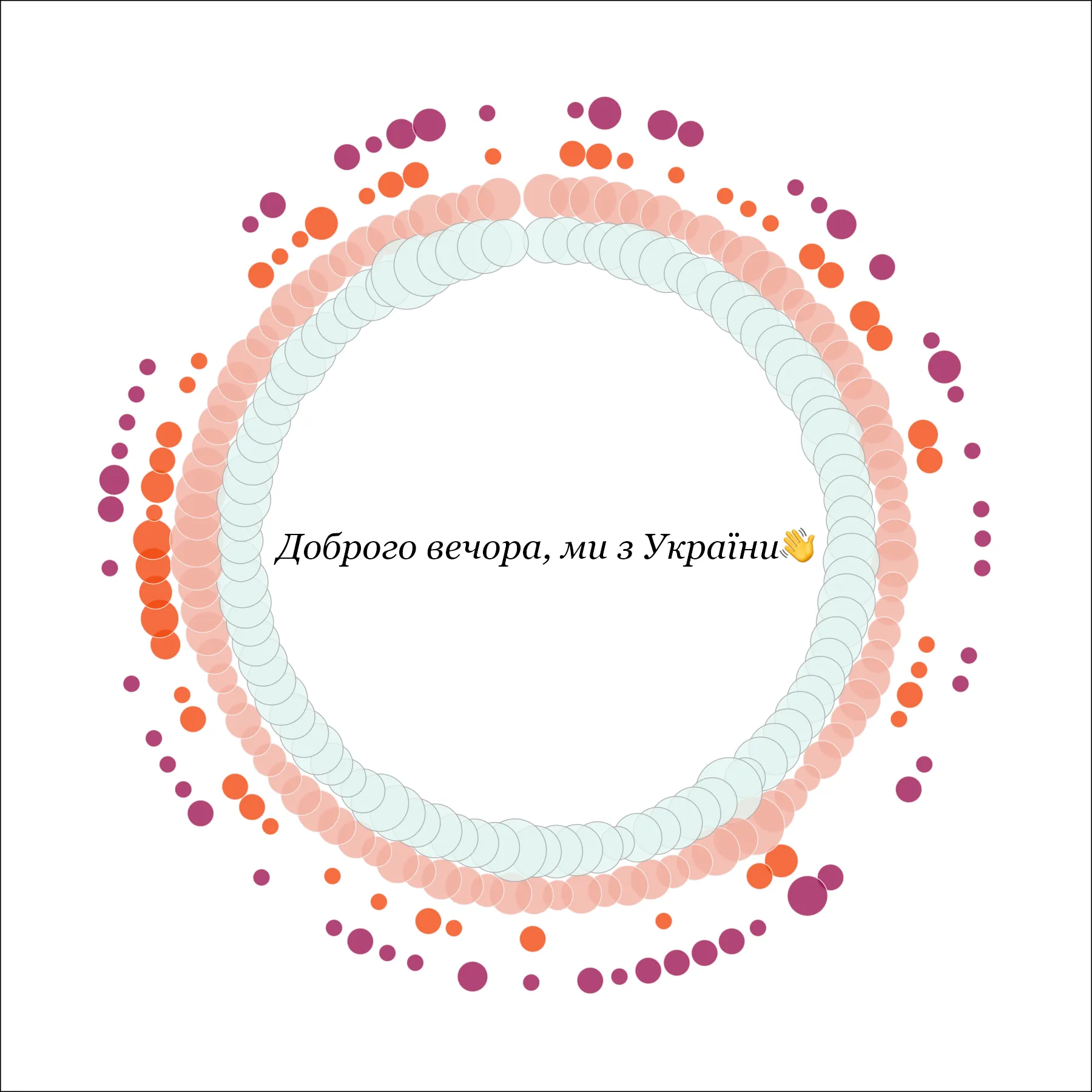

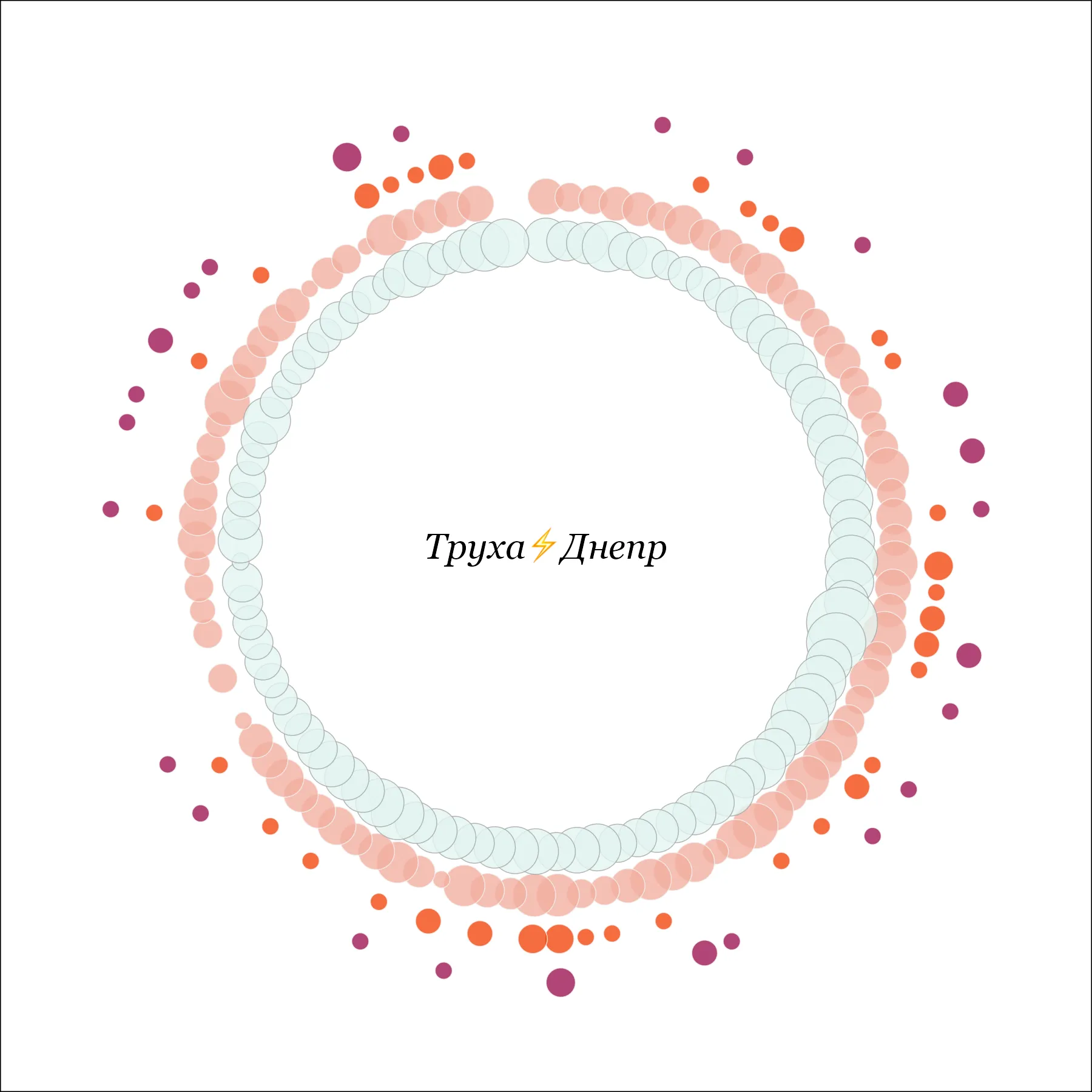

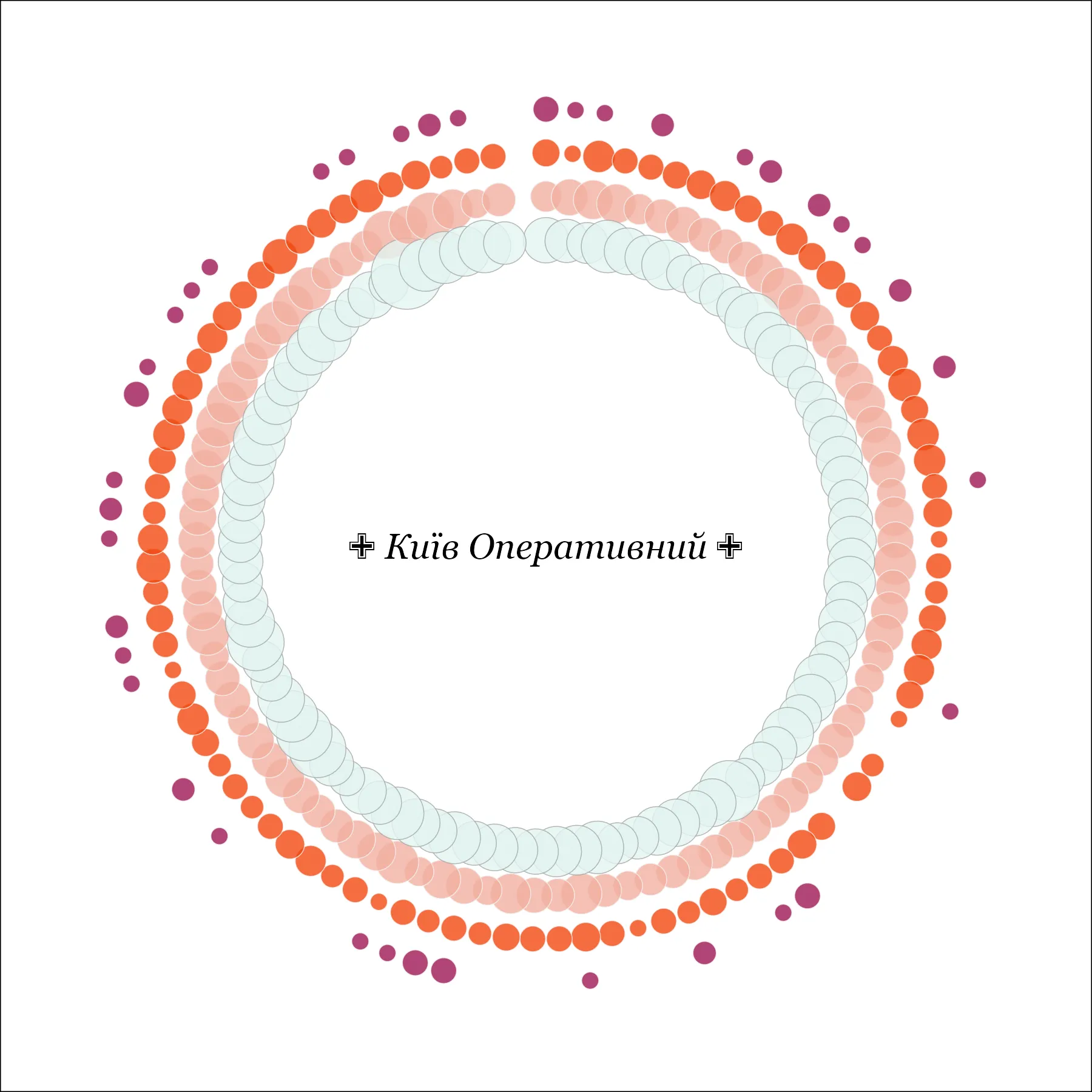

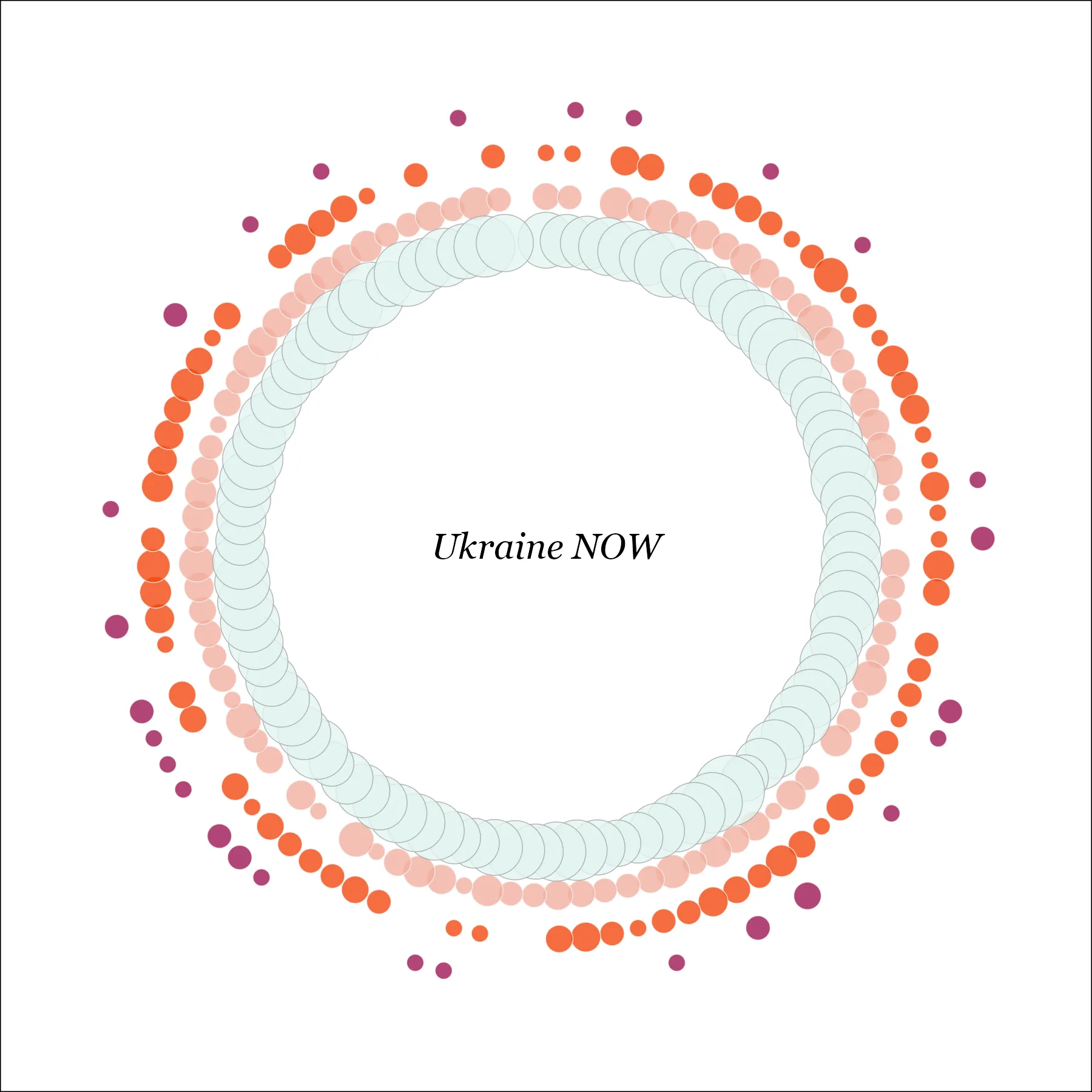

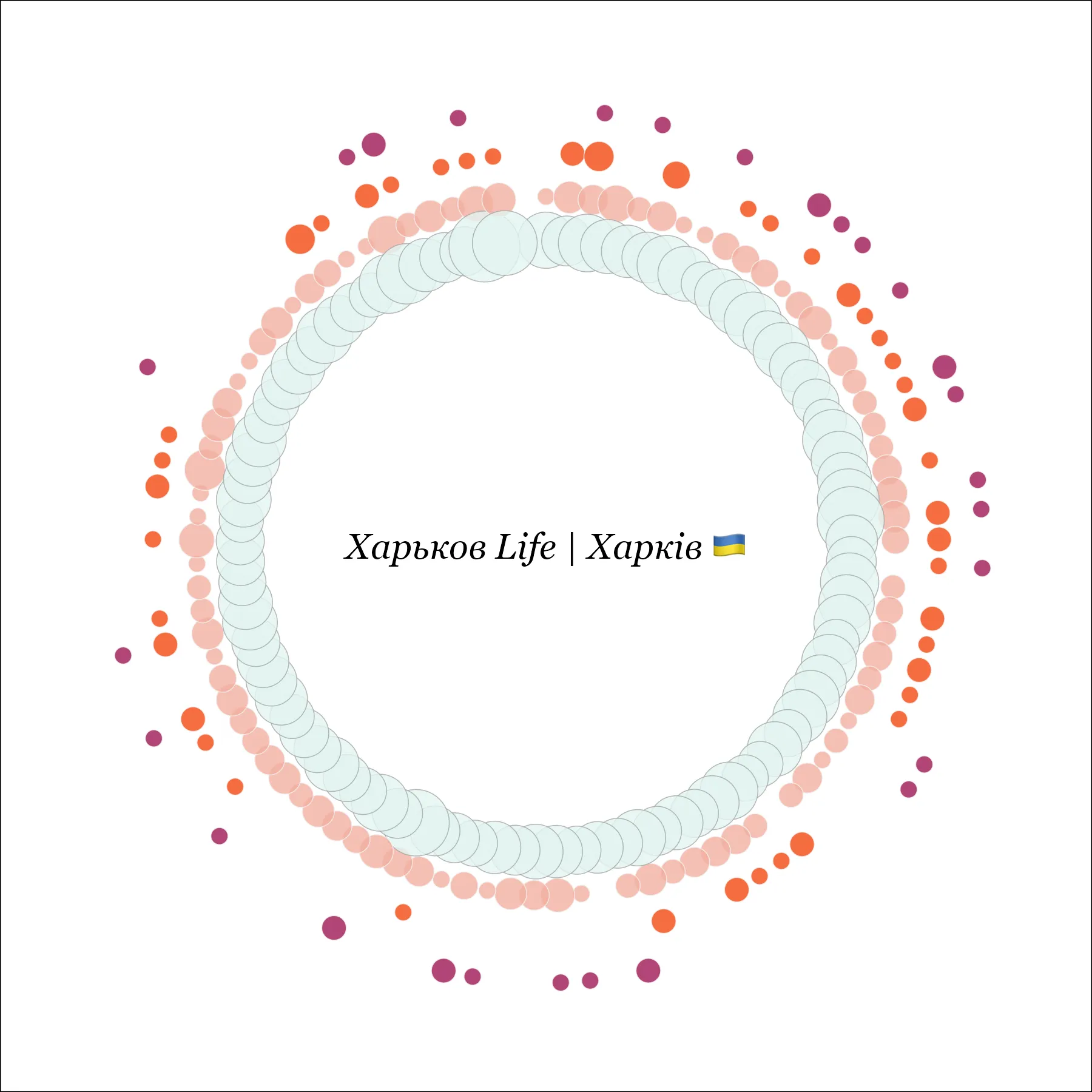

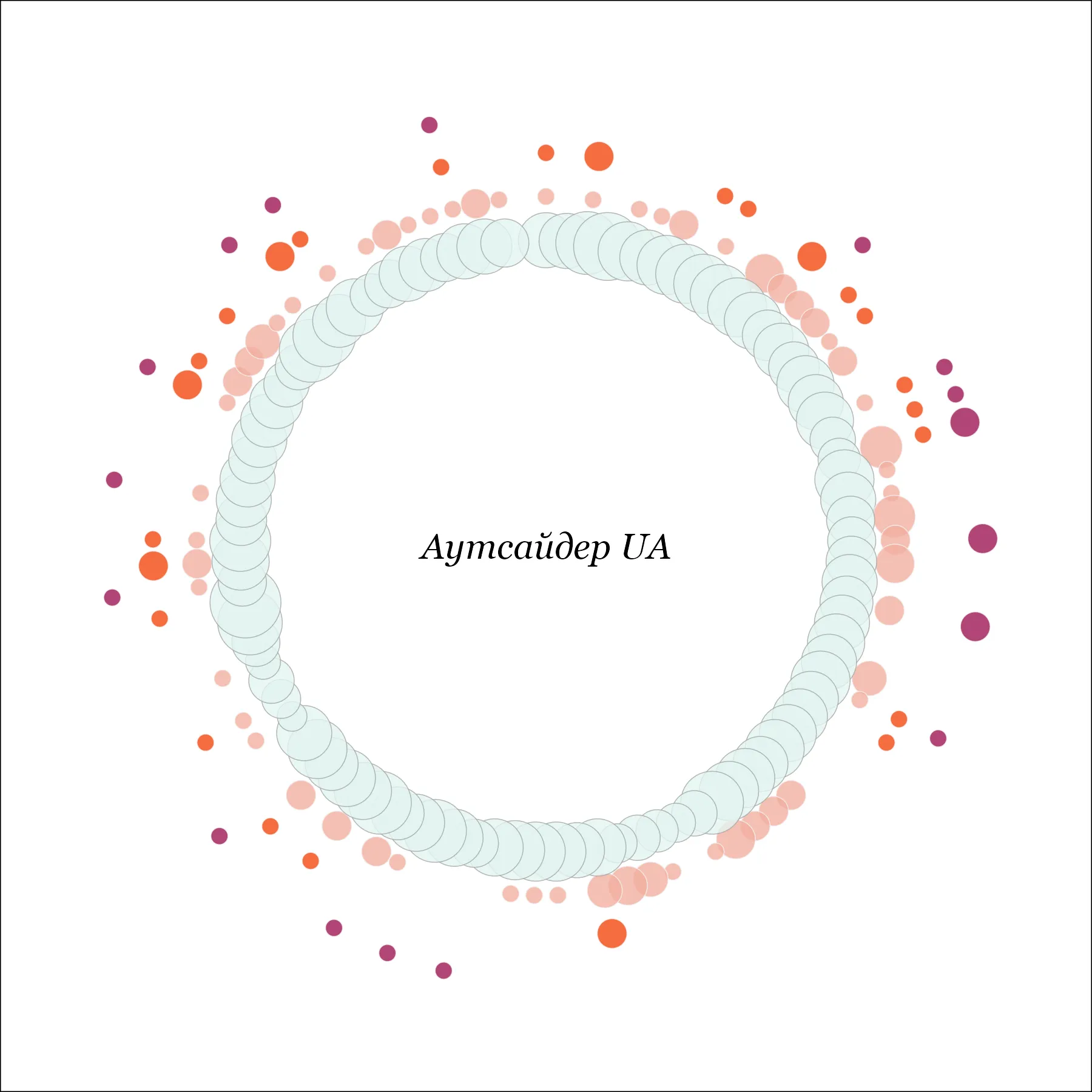

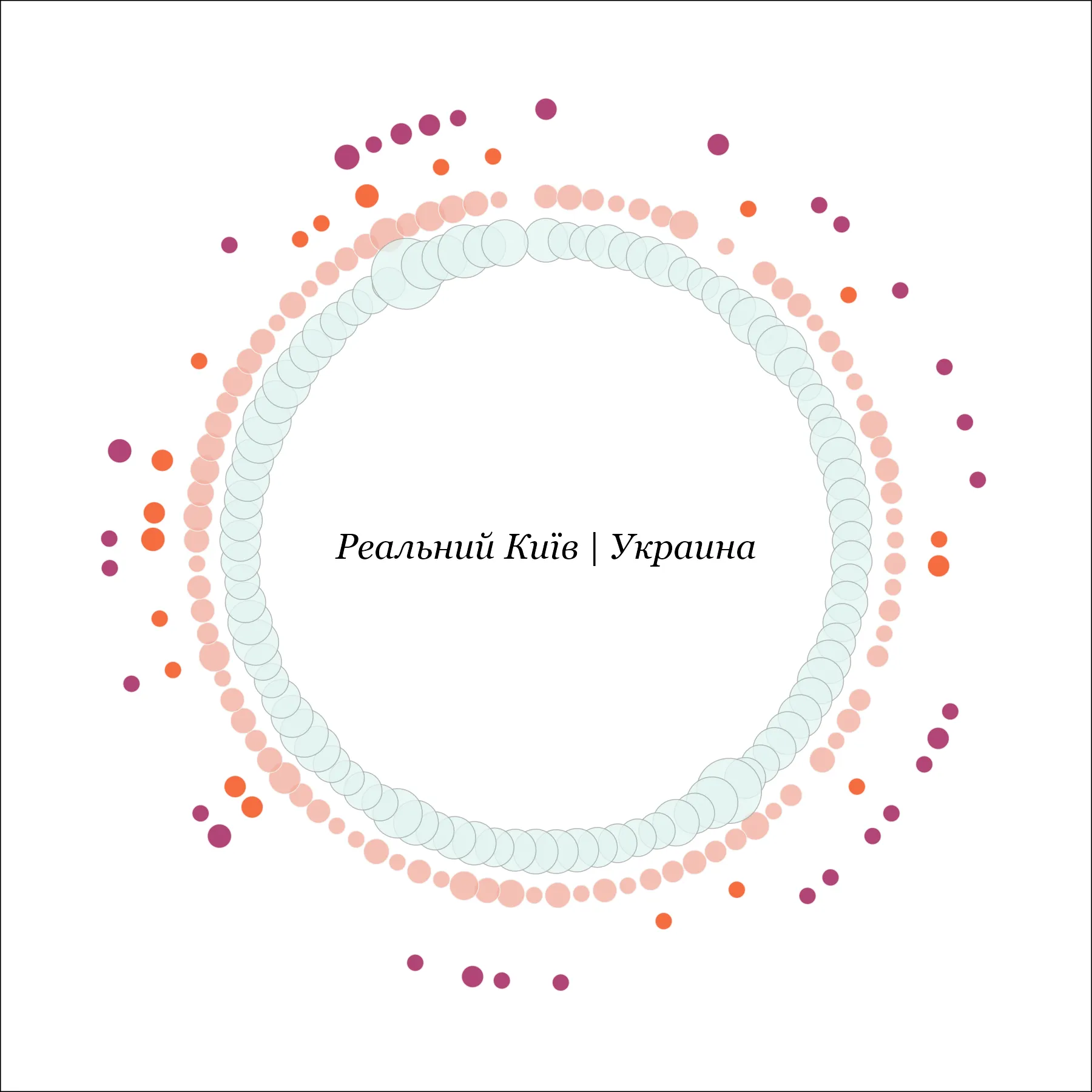

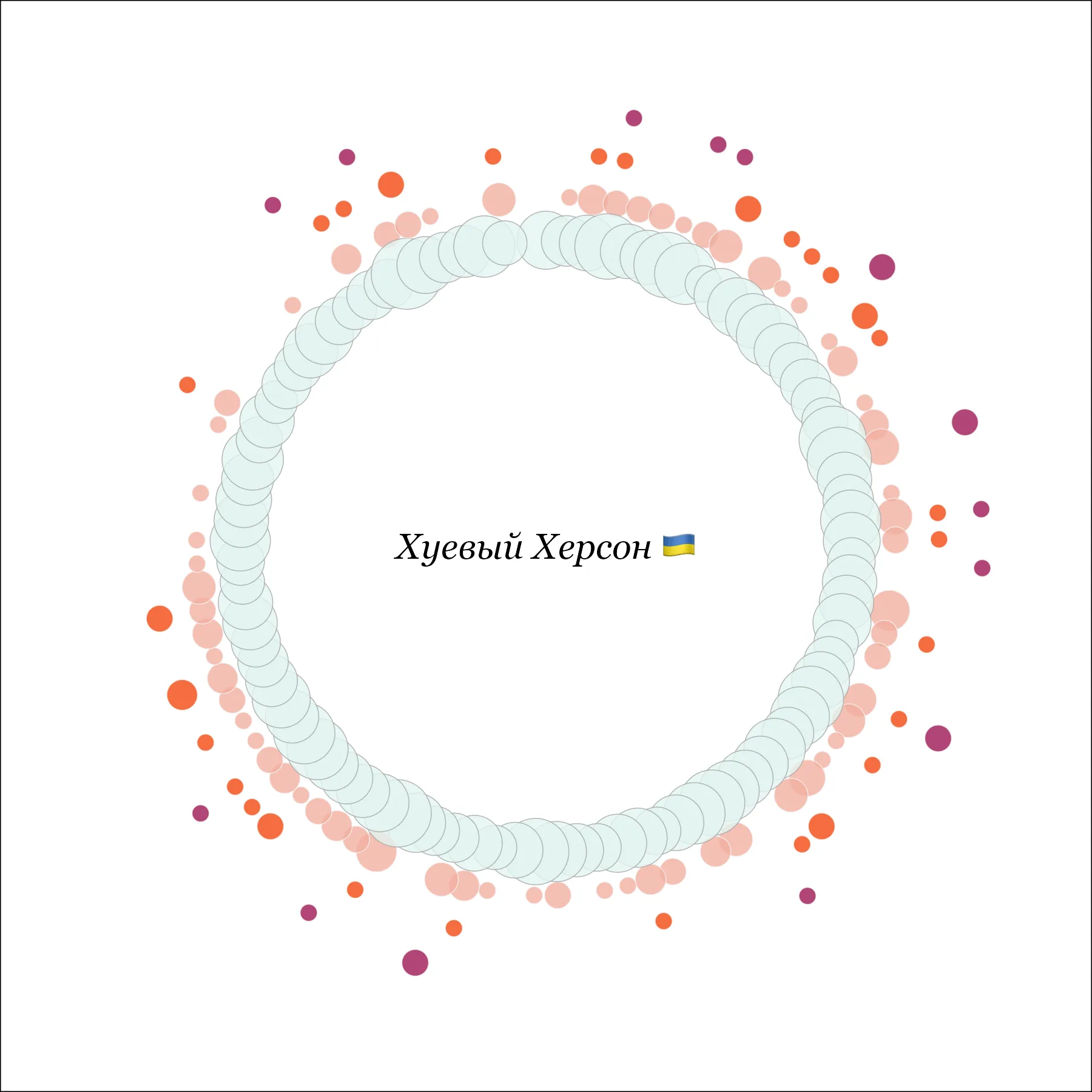

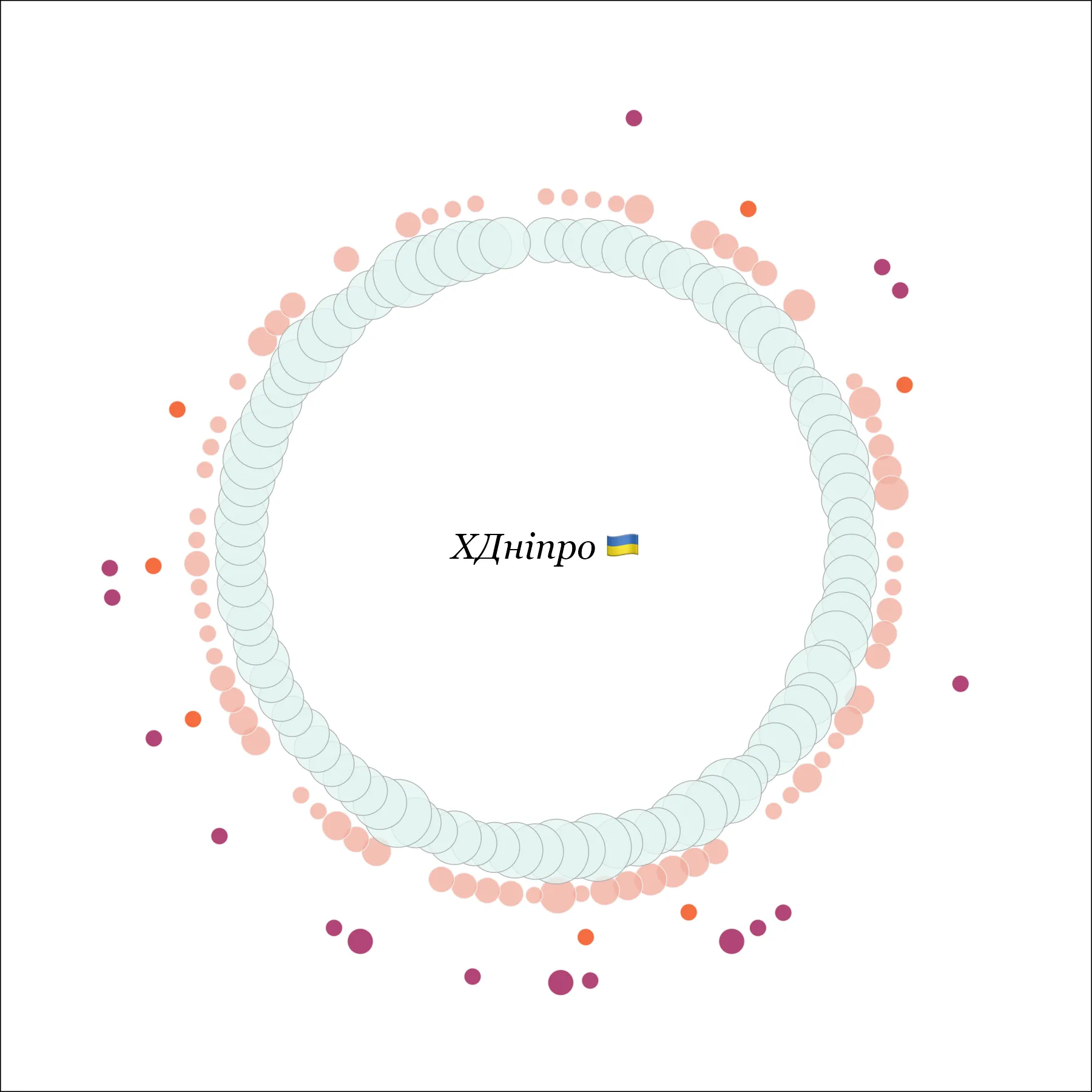

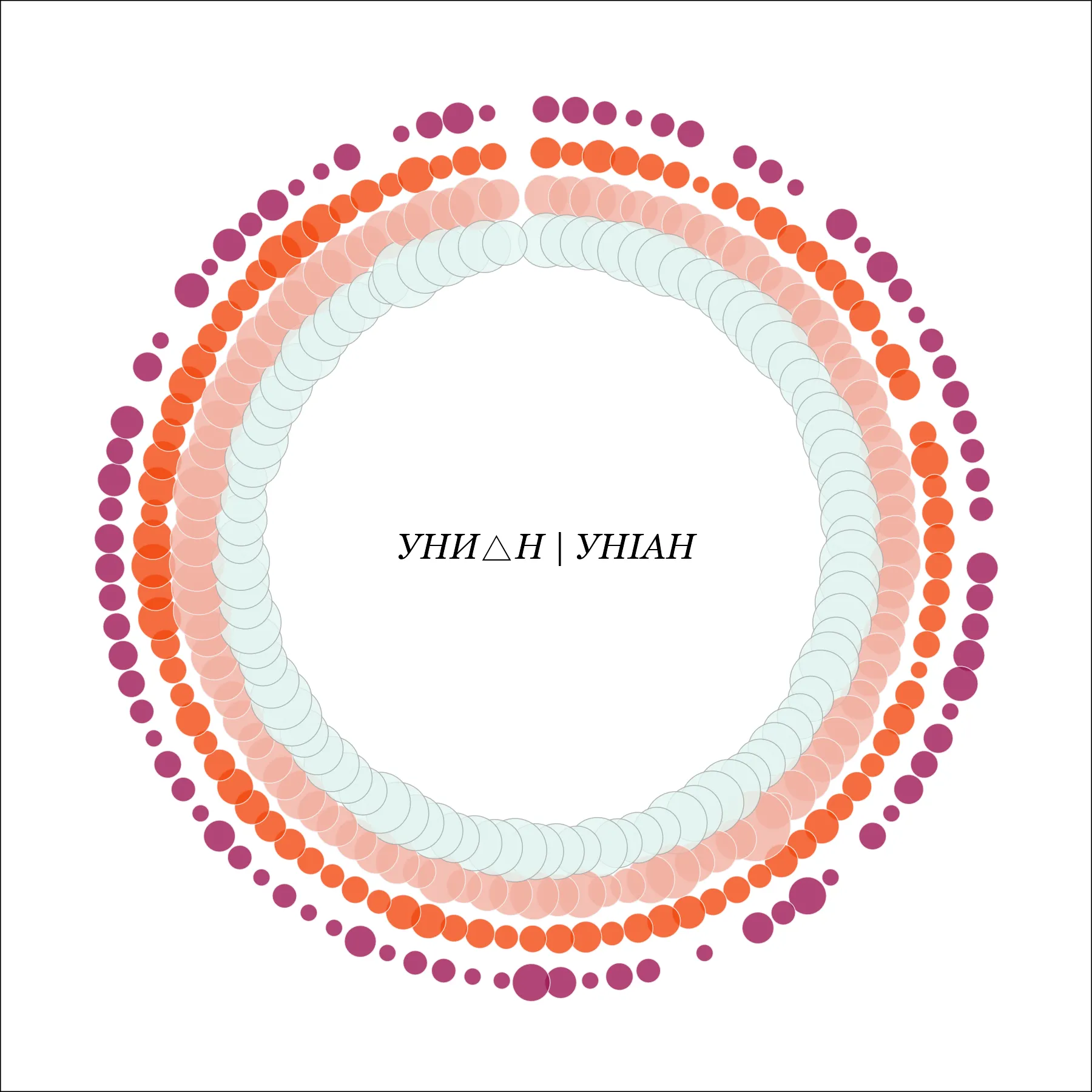

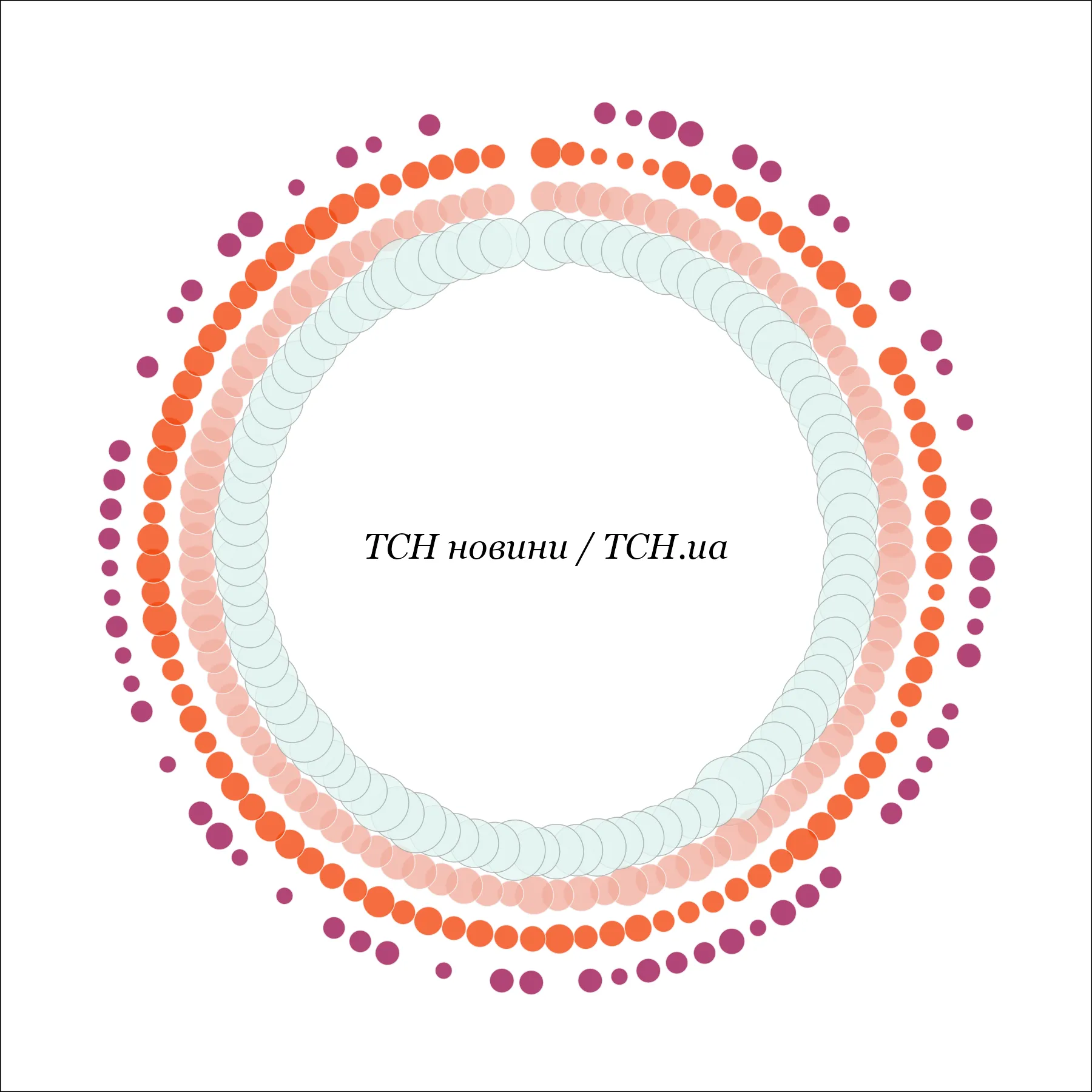

Below, we show these combined techniques and the loaded language on graphs with several circles of dots. On the other graphs, we calculate the percentage of manipulative language in all ten techniques. Each graph states what was calculated and how it was calculated.

If you think these techniques are used only by Russian propagandists, we have to disappoint you. Our algorithm analyzed the summer news feeds of the most popular Telegram channels in Ukraine, and it seems that we encounter manipulations many times every day.

Let's take Trukha (ТРУХА), one of the most popular news Telegram channels in Ukraine. In the summer, the channel published an average of 37 posts daily—3,400 over the three summer months.

And almost half of them (~45%) contained at least one type of manipulation.

Most often, it was loaded/emotional language.

The Russian Telegram channel Rezident, which keeps claiming to be Ukrainian, manipulated almost all the time: 90% of its almost 1,000 summer posts used at least one manipulation technique.

It seems that sowing fear and doubt is the channel's main objective. 67% of its publications were aimed at making Ukrainians afraid or disappointed, whether in the government, the Defense Forces, or the assistance of international partners.

If you think that a wartime news feed is impossible without manipulation, the Telegram channel of Suspilne (the Public Broadcasting Company of Ukraine) proves otherwise: our language model identified fewer than 2% of its publications as manipulative.

However, this level of neutrality is typical for a small share of Telegram channels.

Manipulation rate across 10 parameters in different groups of channels

In this graphic, we took the posts that our model classified as manipulative. Then we determined their share of the total number of posts published by the channel.

Does this mean that the use of emotional vocabulary, which our model identifies as manipulative, is a priori bad? No, it does not.

Sometimes, it is quite justified (what's wrong with being happy about Olympic medals?).

Sometimes the use of such vocabulary is justified by the genre and subject matter of the channel (imagine if blogger Kazansky suddenly started writing dryly and without emotion, or the 3rd Assault Brigade stopped emotionally describing how it is destroying the occupiers).

Sometimes, outright propagandists mimic a balanced media outlet and largely avoid manipulative vocabulary, such as the Politika Strany (Политика страны) channel. It is the channel of the pro-Russian media outlet Strana.ua, which is blocked in Ukraine, and it manipulated in 20% of its posts in the summer. This does not make the channel a more reliable source of information, nor does it make it less quoted by Russian media.

But is it worth relying on emotional bloggers or manipulative news aggregators to get news and learn about the most important things on a daily basis?

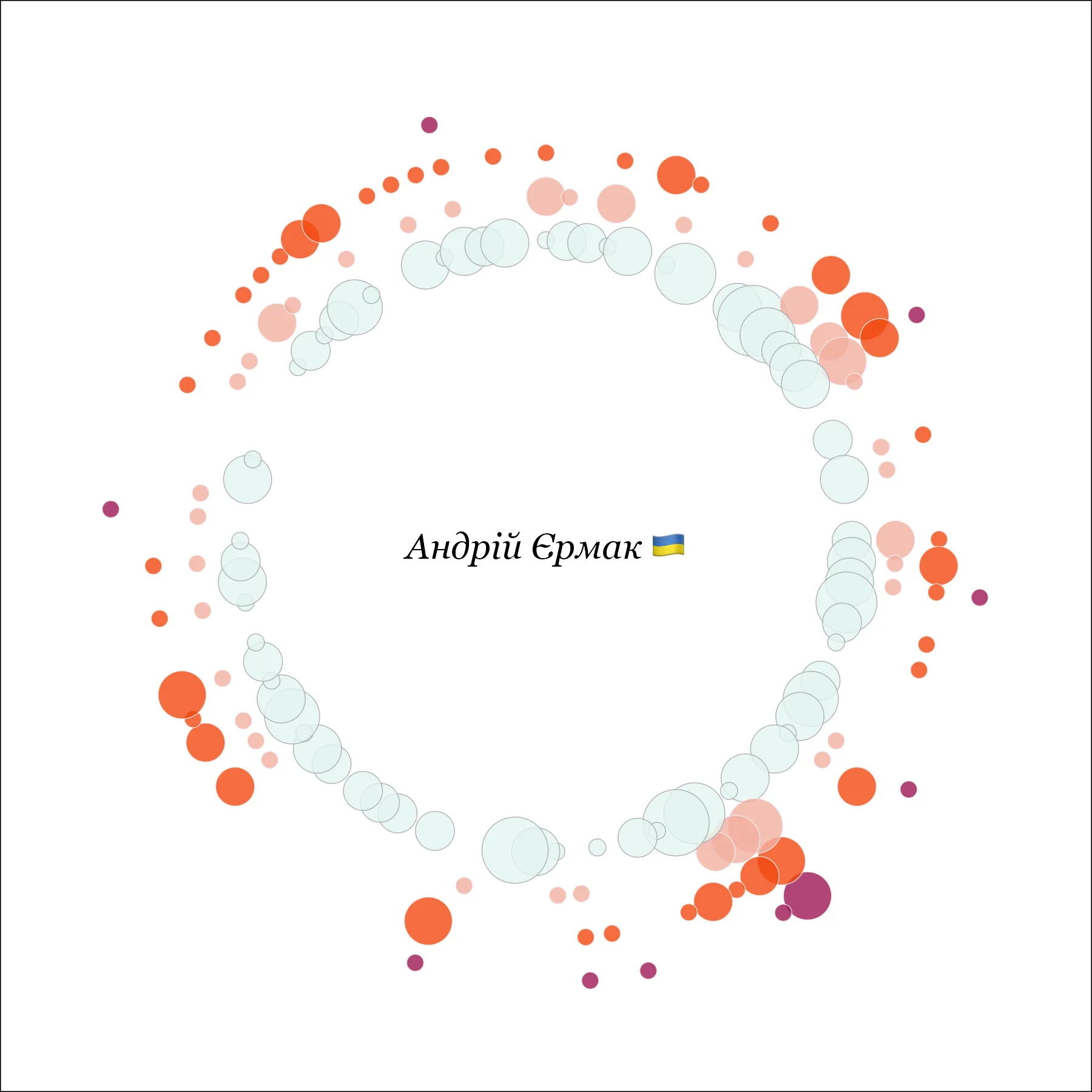

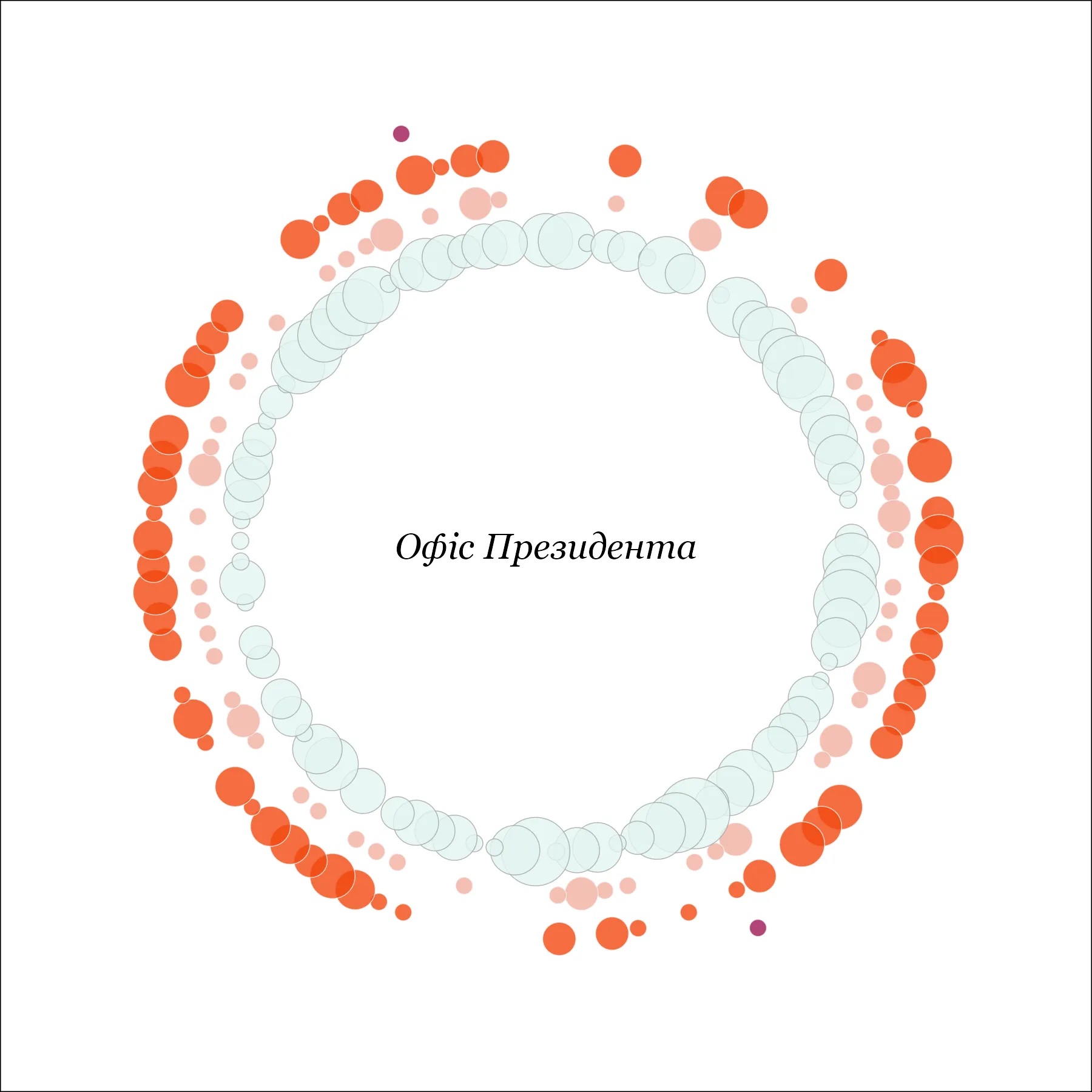

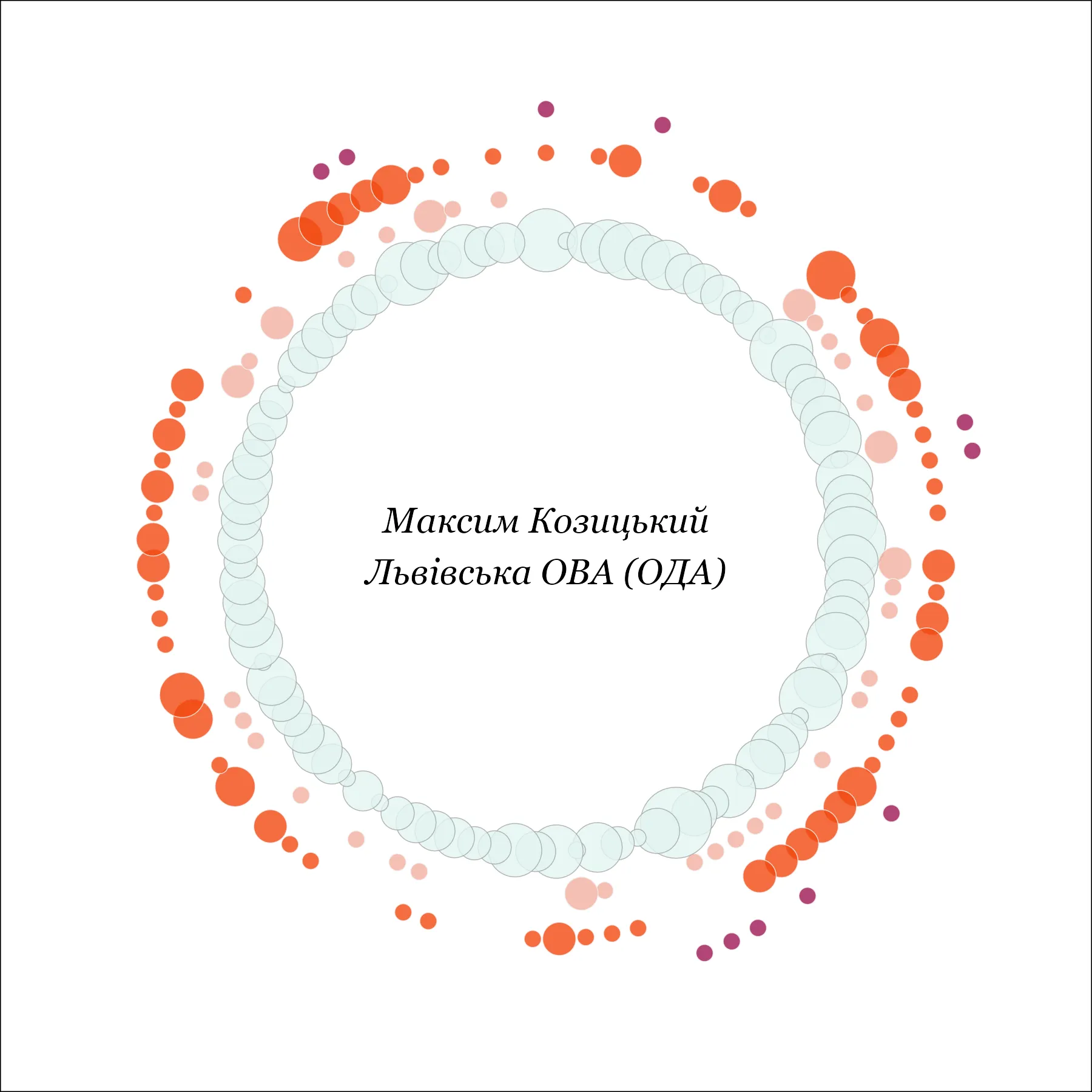

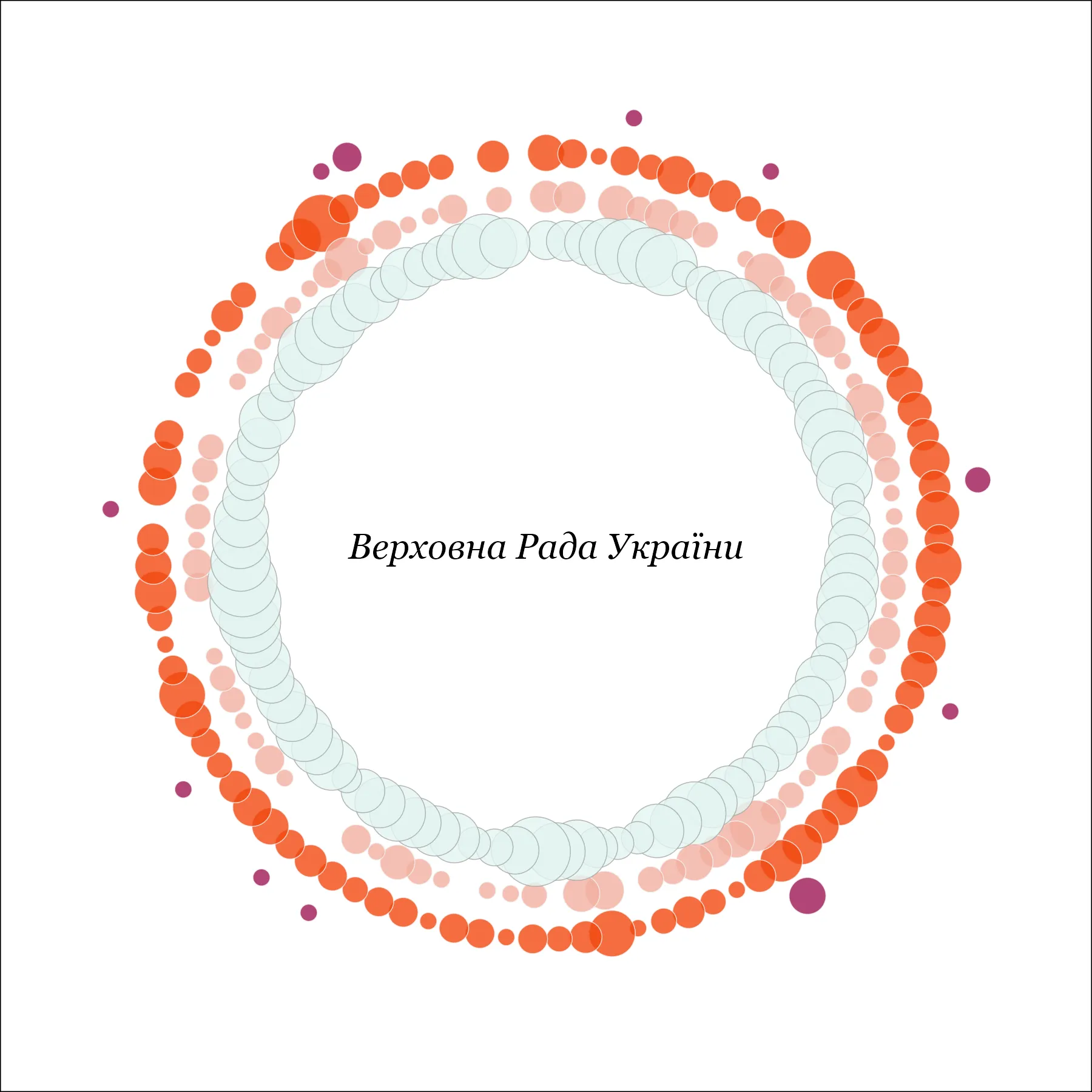

Circles of emotions

The graphs are sorted by the percentage of posts that contain manipulative vocabulary. That is, there may be few posts, but our algorithm has identified most of them as manipulative, and this channel will be in the lead. In some other cases, there are a lot of posts and manipulations, but the overall percentage of manipulative content is lower among the large number of posts.
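A minimal sketch of this ordering, assuming each channel is represented by a list of per-post flags (True when the model marked the post as manipulative). The channel names and counts are invented for illustration and are not taken from our data.

```python
def manipulation_share(flags):
    """Share of posts flagged as manipulative; 0.0 for a channel with no posts."""
    return sum(flags) / len(flags) if flags else 0.0


def rank_channels(posts_by_channel):
    """Sort channels by share of manipulative posts, highest first."""
    shares = {name: manipulation_share(flags) for name, flags in posts_by_channel.items()}
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)


# Invented example: a small channel with few posts vs. a large, busy one.
channels = {
    "small_channel": [True] * 9 + [False] * 1,      # 9 of 10 posts flagged
    "large_channel": [True] * 450 + [False] * 550,  # 450 of 1,000 posts flagged
}

for name, share in rank_channels(channels):
    print(f"{name}: {share:.0%}")
```

The small channel ranks first even though the large one has fifty times more flagged posts, because the ordering uses the share, not the raw count.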

Pro-Russian

The graphs are ordered from the most manipulative to the least manipulative, from left to right and from top to bottom

Bloggers

State Sources

News Aggregators

Official Newsrooms

METHODOLOGY

We analyzed the 50 most popular public Telegram channels (those with the largest number of subscribers) in the News and Media category and the 50 most popular channels in the Politics category according to Telemetr.io data as of September 1, 2024. Channels whose posts contain only messages about blackouts, air raids, or the flight path of Russian missiles and drones were excluded from this list beforehand.

Each post (if it contained more than 100 characters) was checked for the presence of at least one of the following ten manipulation techniques: loaded language; glittering generalities; euphoria; appeal to fear; fear, uncertainty, and doubt; appeal to the people; thought-terminating cliché; whataboutism; cherry picking; straw man (each is defined in the list in the body of the article).
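The selection rule above can be sketched as two small checks. This is an illustration under assumptions: the snake_case label names are hypothetical, and we assume that "characters" means the length of the post text as Python counts it.

```python
# Technique names follow the article; the snake_case identifiers are hypothetical.
TECHNIQUES = frozenset({
    "loaded_language", "glittering_generalities", "euphoria", "appeal_to_fear",
    "fud", "appeal_to_people", "thought_terminating_cliche", "whataboutism",
    "cherry_picking", "straw_man",
})

MIN_CHARS = 100  # a post must contain MORE than 100 characters to be checked


def is_eligible(text: str) -> bool:
    """A post is checked only if it is longer than MIN_CHARS characters."""
    return len(text) > MIN_CHARS


def is_manipulative(predicted_labels) -> bool:
    """A post counts as manipulative if at least one of the ten techniques was detected."""
    return any(label in TECHNIQUES for label in predicted_labels)


print(is_eligible("a" * 100), is_eligible("a" * 101))
print(is_manipulative(["whataboutism"]), is_manipulative([]))
```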

The techniques studied were chosen based on existing expert work that is the most valid and relevant for the Ukrainian information field. We relied on Ukrainian expertise on the techniques used by Russian propaganda (such as that of Detector Media). We also held a focus group with Ukrainian journalists, editors, and analysts who are familiar with manipulation and journalistic standards to clarify controversial issues, discuss what should not be considered manipulation, what is justified in the active phase of the war, and what, on the contrary, should be regarded as the most destructive techniques in the Ukrainian segment of Telegram.

For data labeling, we recruited a team of 14 annotators, mainly experienced journalists, analysts, and media professionals. They underwent specialized training and annotated 9,512 Telegram posts, identifying the manipulation techniques used. To ensure the high quality of the data, we formed a group of five reviewers from our editorial team who checked the annotations and provided feedback to the annotators.

Using an annotated dataset of 7,604 samples, we fine-tuned the Meta-Llama-3.1-8B-Instruct model to detect manipulative content on Telegram. The model's performance was evaluated on a separate test dataset comprising 1,908 examples. The main task of the model is to classify messages as manipulative or nonmanipulative and identify specific manipulation techniques among the ten identified classes.
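The training and test sizes add up to the 9,512 annotated posts, which suggests a split of roughly 80/20. A quick arithmetic check (the variable names are ours):

```python
TRAIN_SIZE = 7_604       # samples used for fine-tuning
TEST_SIZE = 1_908        # samples held out for evaluation
ANNOTATED_TOTAL = 9_512  # posts labeled by the annotators

# The two subsets together cover the whole annotated dataset.
assert TRAIN_SIZE + TEST_SIZE == ANNOTATED_TOTAL

train_share = TRAIN_SIZE / ANNOTATED_TOTAL
test_share = TEST_SIZE / ANNOTATED_TOTAL
print(f"train: {train_share:.1%}, test: {test_share:.1%}")  # train: 79.9%, test: 20.1%
```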

During the model development process, we carried out multiple training iterations to enhance its performance. Our final model distinguishes between manipulative and nonmanipulative content with an accuracy of 86%. The precision of detecting manipulative posts is 91%, meaning that only 9% of the posts the model flagged as manipulative were actually nonmanipulative. The model is quite good at detecting and classifying common manipulation techniques, such as loaded language; glittering generalities; appeal to fear; and fear, uncertainty, and doubt. However, it is less effective in detecting more complex and less frequently used techniques, such as appeal to the people, whataboutism, and straw man.
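For readers less familiar with these metrics, here is a minimal sketch of how accuracy and precision are computed from a confusion matrix. Precision is the share of posts flagged as manipulative that really are manipulative, which is a different quantity from the share of nonmanipulative posts that get flagged (the false positive rate). The counts below are toy values chosen for easy arithmetic; they are not our model's confusion matrix.

```python
def accuracy(tp, fp, fn, tn):
    """Share of all posts that were classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


def precision(tp, fp):
    """Share of posts flagged as manipulative that really are manipulative."""
    return tp / (tp + fp)


def false_positive_rate(fp, tn):
    """Share of nonmanipulative posts that were wrongly flagged as manipulative."""
    return fp / (fp + tn)


# Toy counts for illustration only, NOT the article's results.
tp, fp, fn, tn = 45, 5, 10, 40

print(f"accuracy: {accuracy(tp, fp, fn, tn):.2f}")
print(f"precision: {precision(tp, fp):.2f}")
print(f"false positive rate: {false_positive_rate(fp, tn):.2f}")
```

In this toy case, 10% of flagged posts are false alarms (precision 0.90), while about 11% of nonmanipulative posts are flagged; the two numbers answer different questions and generally do not coincide.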

We would like to express our sincere gratitude to our team of annotators, whose hard work and professionalism made this research possible: Nataliia Petrynska, Mykhailo Tymoshenko, Volodymyr Lytvynov, Oleksandra Tymoshenko, Yana Kazmirenko, Nataliia Plahuta, Karyna Veretylnia, Margaryta Yakub, Anna Burgelo, Olha Dyachuk, Anna Velyka, Tymofii Lytvynenko, Arsenii Barzelovych, and Oleh Fitiak.