How to respond to tech changes and ensure access to reliable information

Texty.org.ua presents the speech that our editor-in-chief, Roman Kulchynskyy, delivered at the World Media Congress in Krakow on how media outlets are changing during the AI revolution.

Who we are and how we started using AI

Our media outlet, Texty.org.ua, has long used large language models to explore vast amounts of text. We developed our first algorithm for analyzing Russian disinformation back in 2018. At that time, we downloaded all the news articles from pro-Russian manipulative websites targeting Ukrainian audiences. Then we used machine learning algorithms to identify the narratives they were spreading. Interestingly, there was no glorification of Russia or Putin; their focus was mainly on domestic Ukrainian politics.

However, the current capabilities of AI are incomparable to the early steps we took seven years ago.

AI is developing at an extraordinary pace, and exponential changes are coming. Of course, this rapid evolution affects the information landscape and the news people consume.

Support TEXTY.org.ua

TEXTY.ORG.UA is an independent media without intrusive ads and puff pieces. We need your support to continue our work.

Fortunately, specially crafted fake news still rarely makes its way into the news feeds of quality media outlets, at least in Ukraine. But once again, everything is changing so fast that no one is immune, and there are already several alarming examples. Let me share a few.

How AI influences the news

About a month ago, Slidstvo.Info, a high-quality Ukrainian media outlet, published an investigation. The story was about a Russian prisoner of war who had previously taken part in the occupation of the Ukrainian city of Bucha. The investigation was released as a video and was widely discussed. Other media outlets, which work primarily with text rather than video, decided to write summaries based on it. That's where AI entered the process.

In a small newsroom, nobody had the time to manually transcribe and process a long video, so it was logical to use AI for this task. The algorithm did a great job, with one crucial flaw: the model invented a striking quote that the prisoner had never actually said. It falsely attributed to him the statement that he had no regrets and wanted to fight against Ukraine again.

I assume that human editors, seeing such a sensational quote, picked it up and put it in the headline. It started spreading rapidly across the internet, probably even more widely than the original investigation. The authors later discovered the mistake, and the editors apologized.

But the damage was done: hundreds of thousands of people had read a fabricated — although emotionally satisfying — quote from a trusted media source.

This case shows that convenient and simple tools require a new, careful approach. Observing this, our editorial team at Texty.org.ua decided to develop our own internal policy on the use of AI, even though daily news is not our main focus.

However, the story with Slidstvo.Info is relatively harmless compared to what is happening deeper in the information environment.

AI-generated content on Facebook

If you dig into strange Facebook communities with hundreds of thousands of members, you will see how the creation and spread of fake content via AI has been industrialized and commercialized. Texty.org.ua recently investigated how this system operates.

On Facebook, pages are created and filled with highly emotional, patriotic-looking content.

The Ukrainian segment of Facebook is flooded with photos of supposedly real soldiers, presented as people "whom nobody congratulated on their birthday". These soldiers, looking sad and dejected about it, often have six fingers or other "mutations". Evidently, the images were created using AI.

Example of AI content on Facebook

It has become both a meme and a symbol of the times. Although many people laugh at these memes, others believe them and engage with them.

Content farms use these emotional AI-generated images to draw in more and more readers. Such farms existed before, but AI has made their growth and scale explode.

These fake news groups and their connected websites also often spread fabricated tales of either catastrophic defeats or fantastic victories. Each post contains a link to a site that isn't even indexed by Google. In the world created by these Facebook pages and junk news sites, unbelievable events keep happening: events that are tied to real news but distorted and fictionalized.

The audience for this content, at least in Ukraine, is largely composed of older women from small towns. We spoke to one of them. She told us that she didn’t even remember subscribing to these pages, but when she read their posts, she became emotional and cried.

We showed her how to subscribe to real media.

But the sad reality is that our pensioners are now trapped in an information "pit" largely created by AI.

The companies behind this business run dozens of Facebook groups and hundreds of low-quality sites. The sites are primitive, created by AI, filled with AI-generated content, and promoted across the network by AI tools.

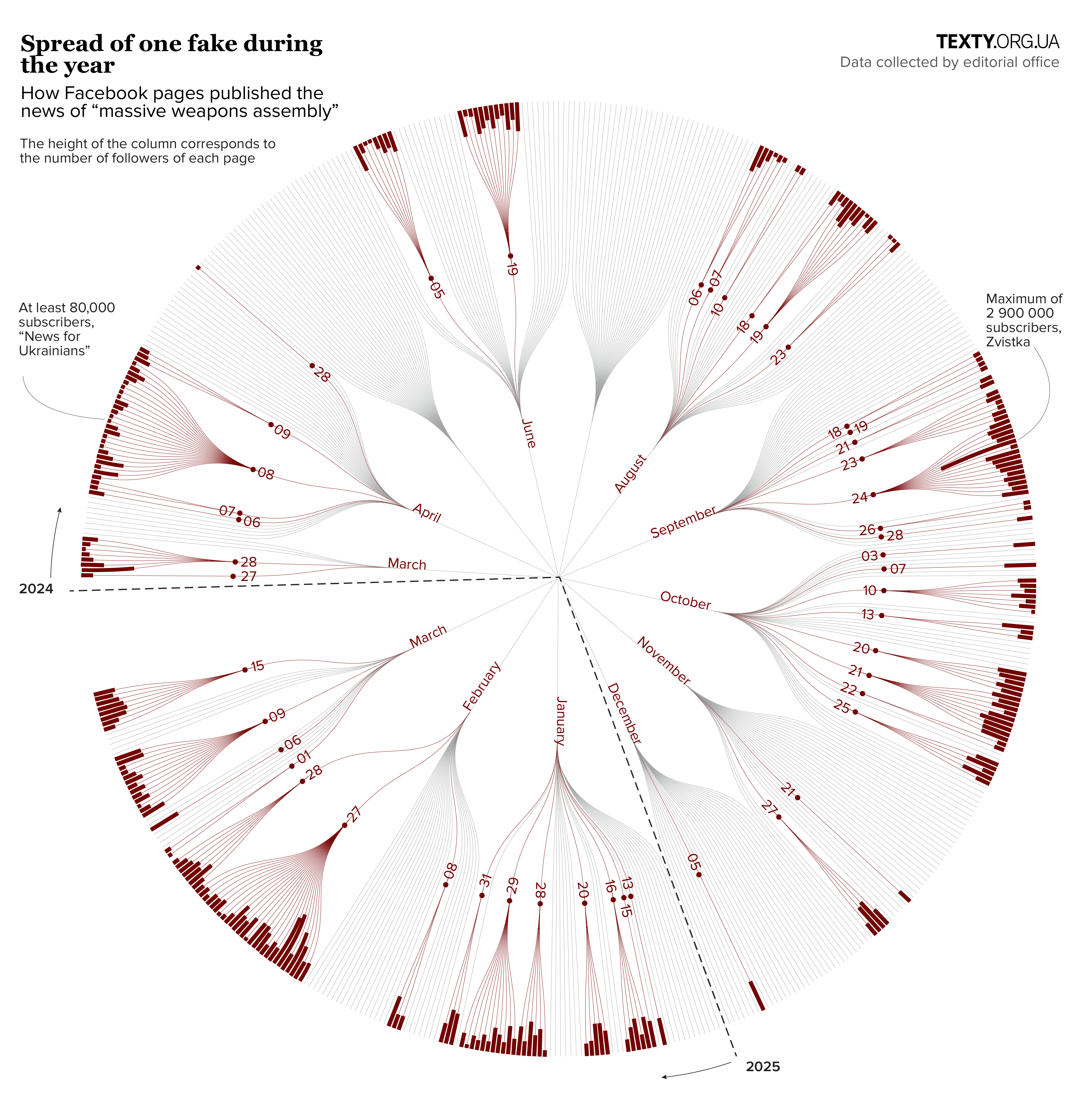

These sites and groups operate in a loop, repeating the same fake news over and over. In one case, we found that 76 pseudo-patriotic pages had published the same false report about the surrender of 60,000 soldiers at least 250 times in total.

Interestingly, many users report this content to Facebook. And while Facebook occasionally blocks the links, new, identical websites are quickly created — again, with the help of AI.


Russian propaganda and AI creators

We've been studying Russian disinformation for a long time. That's why, when we started reading this fake news on Facebook, we immediately noticed that, in addition to the usual clickbait, these pages spread what Russia usually pushes to Ukrainian audiences: total disbelief in their own country.

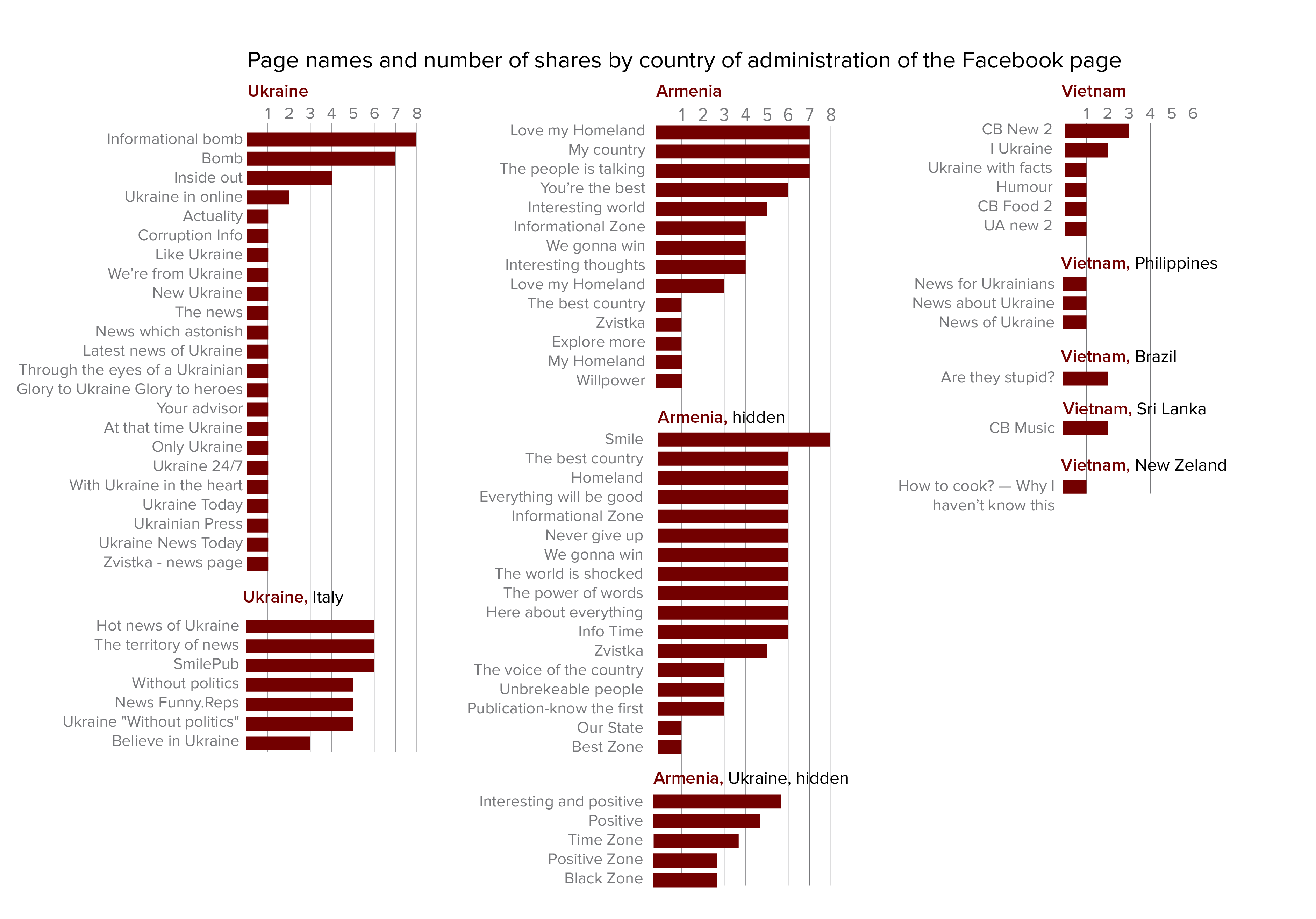

We identified one coordinated network of Facebook groups and websites that operate in sync and are administered from various countries around the world, making it difficult to establish a direct connection to Russian intelligence.

However, what they spread clearly coincides with the Russian narratives we've been researching for years.

But representatives of this business say that they are simply using the information space to make money from advertising.

Fortunately, such false content has not yet reached mainstream Ukrainian media, but thanks to social media, it is consumed by a significant number of people.

Finally, I'll give a third example. NewsGuard, a research organization, describes an interesting case of reality distortion with the help of AI. It turns out that Russians have created a network of websites that publish tens of thousands of AI-generated texts every week. Unsurprisingly, these texts carry all the main narratives of Russian propaganda. But does a single living person visit these sites?

The answer is unexpected and predictable at the same time. The content is made for web crawlers, to fill the text datasets used to train language models.

Nobody may notice it, but someday a tech mogul from California or a journalist from another part of the world might ask the new, powerful, and practically free Chinese language model about Ukraine. They could be told that there is no such country, only a Russian province.

This can and should be countered. We are currently working on an article showing which language models are biased against Ukraine. The more attention traditional media pay to how artificial intelligence works, the cleaner the information environment will become. However, a significant battle lies ahead.